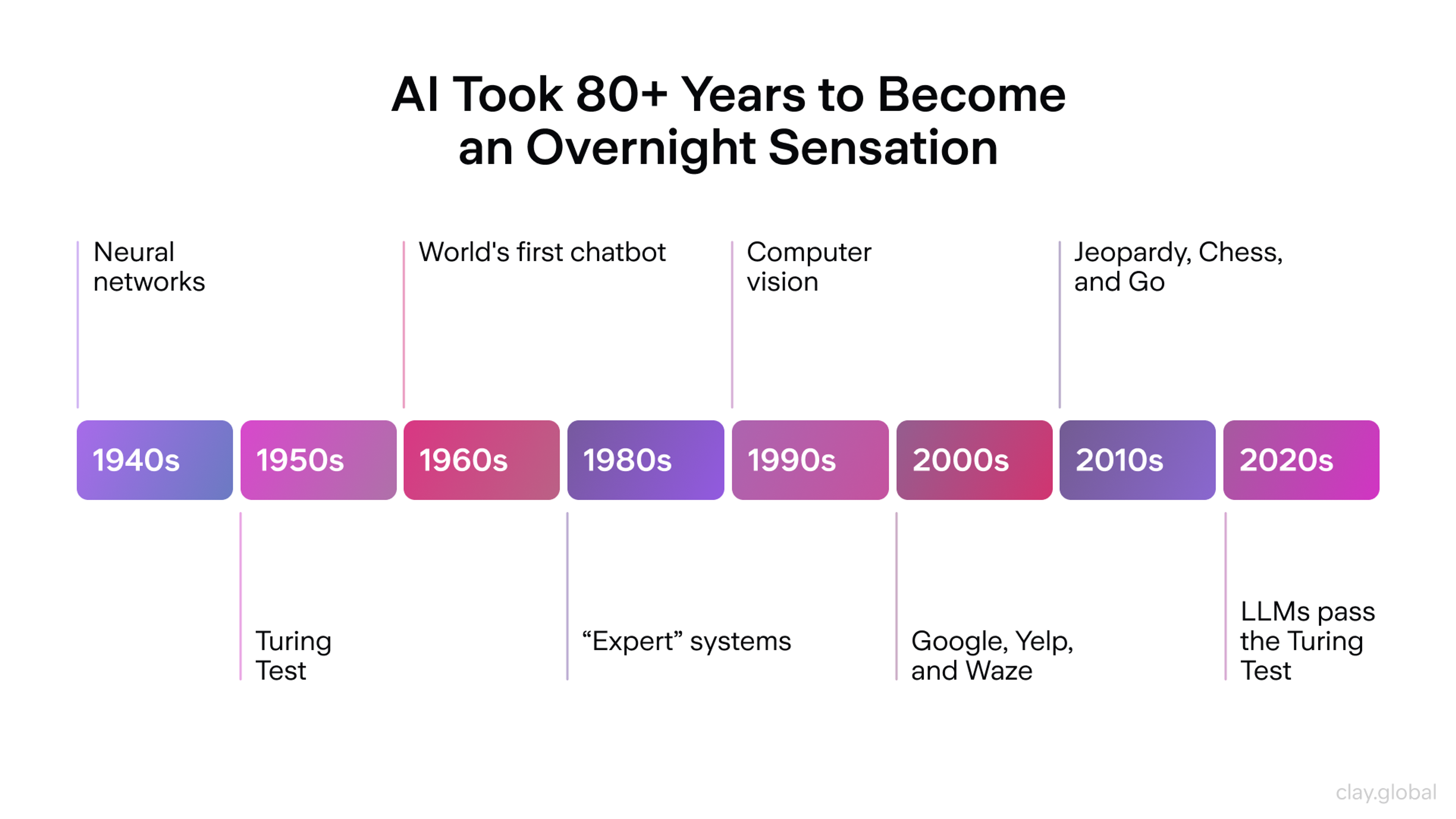

Artificial Intelligence (AI) is technology that helps machines think and learn like people. It solves problems, understands speech, and makes smart choices. Today, AI powers apps, supports doctors, and even drives cars. The AI timeline below gives a chronological overview of AI's development, highlighting key milestones from the 1930s to the present.

This article explores the history of AI by tracing its major milestones and development from early ideas to today’s powerful systems. AI is now part of everyday life, helping with entertainment, safety, and communication.

This progress took years of research and teamwork. AI has changed how we live, work, and use technology.

AI Timeline by Clay

The Dreamers Who Started It All (1930s-1940s)

Before anyone built smart machines, a few brilliant minds laid the foundation. AI began in early philosophical and scientific thought, where thinkers pondered the possibility of creating intelligence outside the human mind. The concept of artificial humans — human-like machines or beings — also inspired these early dreamers.

They asked big questions: Can machines think? How do we turn ideas into instructions? What makes intelligence possible?

These foundational ideas were created by philosophers, mathematicians, and computer scientists.

Alan Turing: The Father of Computer Science

In 1936, Alan Turing shared an idea that changed how we understand machines. He described a simple, imaginary machine that could solve problems by following clear steps. This “Turing Machine” proved that machines could complete complex tasks using basic instructions.
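
To make the idea concrete, here is a minimal sketch of such a machine in Python. The rule table, the state names, and the `run_turing_machine` helper are all made up for illustration; Turing described his machine on paper, not in code. This toy machine walks along a tape and flips every bit until it reaches a blank cell.

```python
def run_turing_machine(tape, rules, state="scan", blank="_", max_steps=1000):
    """Run a one-tape machine until it reaches the 'halt' state."""
    cells = dict(enumerate(tape))  # tape position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        # Each rule says: what to write, which way to move, which state comes next.
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical rule table: flip each bit, stop at the first blank cell.
FLIP_RULES = {
    ("scan", "0"): ("1", "R", "scan"),
    ("scan", "1"): ("0", "R", "scan"),
    ("scan", "_"): ("_", "R", "halt"),
}

flipped = run_turing_machine("1011", FLIP_RULES)
print(flipped)  # 0100
```

The machine itself only reads, writes, and moves. Everything "smart" lives in the rule table, which is the point Turing was making.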

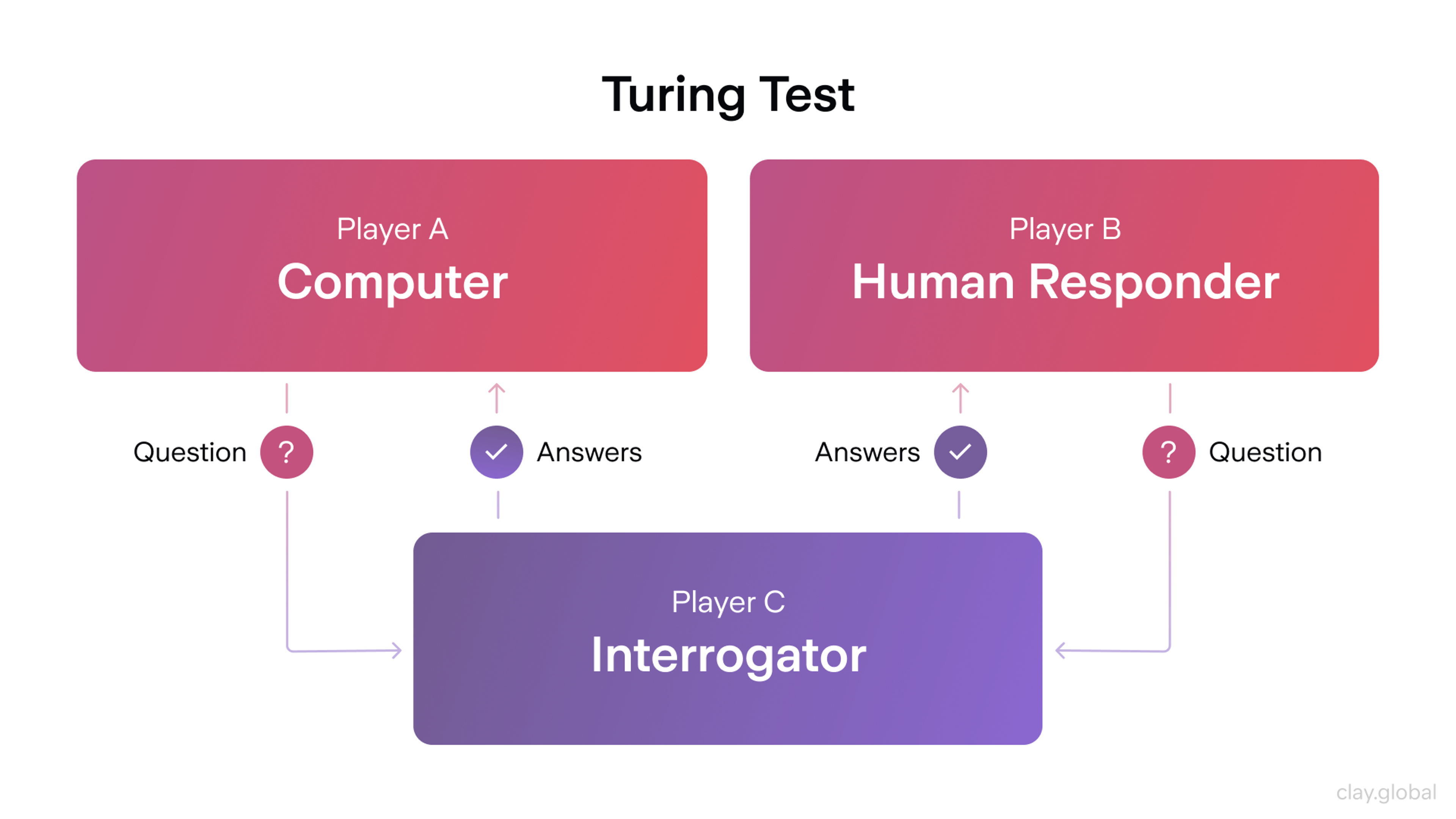

In 1950, Turing turned to the question of machine intelligence. His paper “Computing Machinery and Intelligence,” now seen as a seminal work in artificial intelligence, introduced the Turing Test, which he originally called the imitation game. In this test, a person talks with both a human and a machine. If the person can’t tell which is which, the machine is seen as intelligent.

The Turing Test was designed to measure computer intelligence and assess whether a machine could mimic human responses. Turing also envisioned a future where machines might surpass the capabilities of human brains.

Turing Test by Clay

Claude Shannon: The Information Pioneer

In the 1940s, Claude Shannon discovered how to turn any message into computer language. He showed that all information could be written as binary code using only ones and zeros. This idea became the foundation for all digital technology.
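
A short Python sketch shows what "writing a message as ones and zeros" looks like in practice. The helper names `to_bits` and `from_bits` are made up for this example, and UTF-8 is a modern encoding chosen for convenience, not something from Shannon's work.

```python
def to_bits(message):
    """Encode text as groups of eight ones and zeros (one group per byte)."""
    return " ".join(format(byte, "08b") for byte in message.encode("utf-8"))


def from_bits(bits):
    """Decode groups of eight bits back into text."""
    return bytes(int(chunk, 2) for chunk in bits.split()).decode("utf-8")


encoded = to_bits("AI")
print(encoded)             # 01000001 01001001
print(from_bits(encoded))  # AI
```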

Shannon also proved that machines could make logical decisions using simple electrical switches. His work showed that computers could process data and make choices on their own. Shannon’s theories helped researchers understand how machines could be designed to model human cognitive processes, bridging the gap between artificial intelligence and human thought. These ideas became an important part of how AI works today.

Shannon’s groundbreaking concepts also influenced the development of the first programming language for AI, enabling machines to perform complex reasoning and problem-solving tasks.

Norbert Wiener: The Feedback Expert

Norbert Wiener studied how systems control themselves by using feedback. For example, a thermostat turns on the heat when a room gets cold. He called this idea “cybernetics.”
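
The thermostat example can be sketched as a small feedback loop in Python. All the numbers here (target temperature, switching band, heating and cooling rates) are invented for illustration, and `thermostat_step` and `simulate` are hypothetical helper names.

```python
def thermostat_step(temp, heater_on, target=20.0, band=0.5):
    """One feedback cycle: measure, compare with the target, then act."""
    if temp < target - band:
        heater_on = True       # too cold: switch the heater on
    elif temp > target + band:
        heater_on = False      # too warm: switch it off
    # Assumed room behavior: warms 0.4 degrees per step when heated, cools 0.3 otherwise.
    temp += 0.4 if heater_on else -0.3
    return temp, heater_on


def simulate(start_temp, steps):
    """Run the loop and record the temperature after each step."""
    temp, heater_on = start_temp, False
    history = []
    for _ in range(steps):
        temp, heater_on = thermostat_step(temp, heater_on)
        history.append(round(temp, 1))
    return history


history = simulate(start_temp=17.0, steps=50)
print(history[-5:])
```

The room starts cold, warms up, and then hovers around the target. The system corrects itself using nothing but its own measurements, which is the core idea of feedback.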

Wiener believed machines could learn from their surroundings and change their actions. His ideas helped shape early robots and smart systems that react to the world around them.

Wiener’s theories also inspired early efforts to create an artificial brain: machines designed to mimic human learning and adaptation. Wiener, Turing, and Shannon didn’t build AI, but they created the ideas behind it. Their work still guides how we build intelligent machines today.

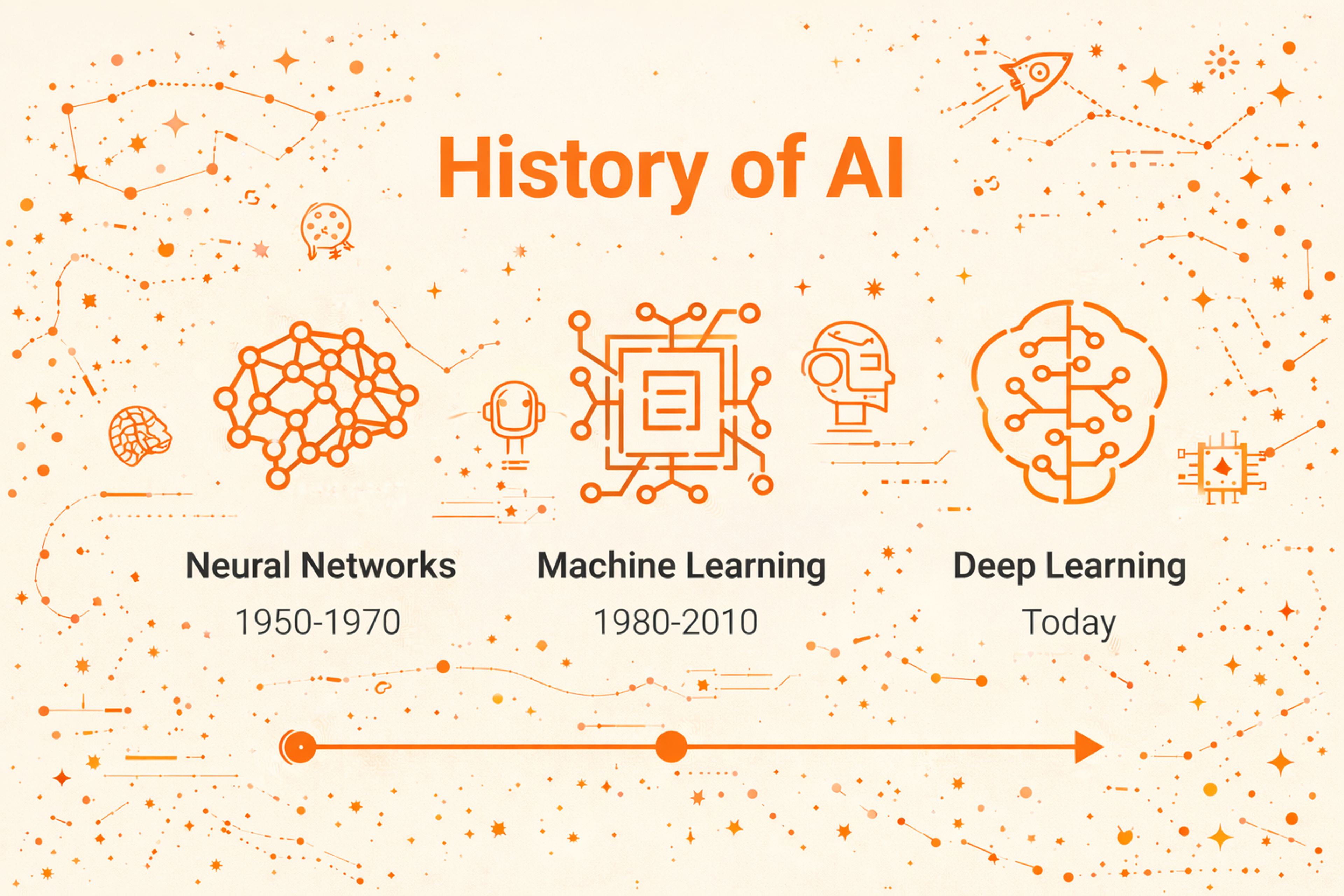

History of AI

The First Thinking Machines (1950s-1960s)

By the 1950s, scientists stopped just talking about smart machines. They started creating machines that could actually perform intelligent tasks, marking the transition from theory to practice. This period produced the first programs that could reason, solve problems, and even chat with people.

The Birth of AI (1956)

In 1956, a group of computer scientists met at Dartmouth College for a summer workshop. John McCarthy organized the event and coined the term artificial intelligence, giving this new field its name. He was joined by other top researchers, including Marvin Minsky, Allen Newell, Herbert Simon, and Claude Shannon.

Marvin Minsky, Allen Newell, Herbert Simon, and Claude Shannon at Dartmouth College

Marvin Minsky later co-founded the MIT Artificial Intelligence Laboratory, which eventually became part of the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). It has played a significant role in advancing AI research and is recognized as a leading institution in the field.

This meeting marked the official start of artificial intelligence research as a formal field. The team shared a bold idea: machines could one day think, learn, and solve problems like people do.

The First AI Programs

The Dartmouth team didn’t just share ideas. They built real programs. Starting in 1955, Allen Newell, Herbert Simon, and Cliff Shaw created the Logic Theorist. It could prove math theorems using logic. Many people see it as the first true AI program. The Logic Theorist was built using early programming languages, which played a crucial role in the development of artificial intelligence.

In 1957, they built the General Problem Solver. This computer program handled different problems by breaking them into smaller steps. It had limits, but it showed that machines could reason through complex tasks.

Teaching Machines to Speak

In 1954, IBM and Georgetown University tested machine translation. Their computer translated 60 Russian sentences into English. The results were simple, but they showed that machines could begin to work with human language, marking an early step in natural language research.

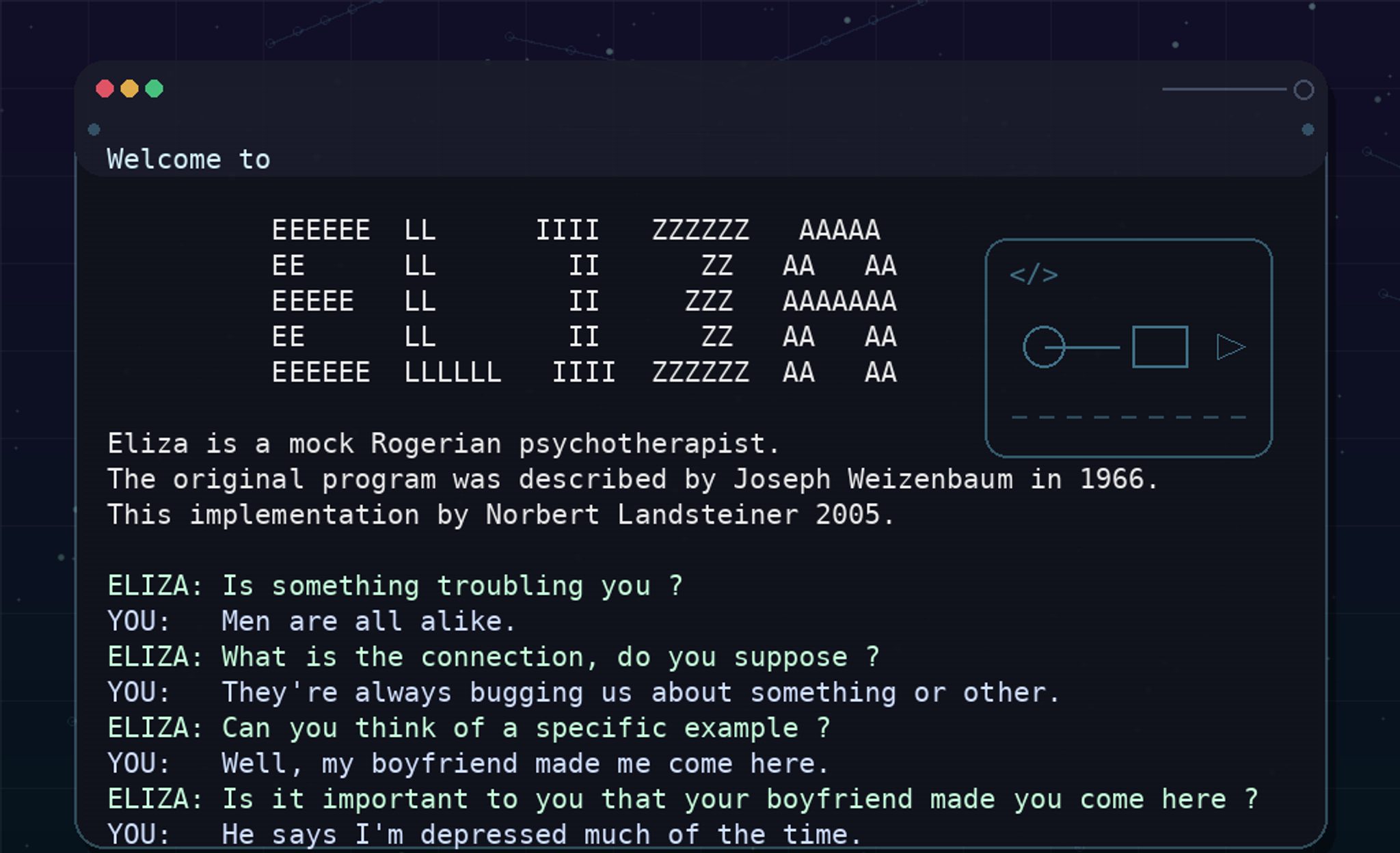

In 1966, Joseph Weizenbaum created ELIZA, one of the first chatbots. It acted like a therapist by asking questions and repeating parts of what users said. Many people thought they were talking to a real person, even though ELIZA didn’t understand the words.

ELIZA Chatbot
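
The trick behind this kind of chatbot can be sketched in a few lines of Python. The patterns and replies below are made up for illustration and are not taken from Weizenbaum's original script. The program only matches text patterns and swaps a few pronouns. It has no idea what the words mean.

```python
import re

# Hypothetical pronoun swaps so the reply points back at the user.
REFLECTIONS = {"i": "you", "my": "your", "am": "are", "me": "you"}

# Hypothetical (pattern, reply template) pairs, checked in order.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment):
    """Swap first-person words for second-person ones."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(text):
    """Return the reply for the first matching pattern, or a stock fallback."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please tell me more."


print(respond("I feel ignored by my boss."))  # Why do you feel ignored by your boss?
print(respond("Hello"))                       # Please tell me more.
```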

Around the same time, early AI programs like Daniel Bobrow's STUDENT were developed to solve algebra word problems, demonstrating the potential of natural language processing in practical applications.

These early systems couldn’t learn on their own, but they proved machines could follow human-like patterns. The scientists who built them helped shape the future of AI. The development of speech recognition during this era was another important milestone, expanding AI's ability to understand and process human communication.

The Rise and Fall of Expert Systems (1970s-1980s)

The 1970s brought excitement and big promises. AI researchers believed they were close to creating truly intelligent machines. At the time, expert systems were developed with the goal of replicating intelligent behavior in computers, aiming to mimic human decision-making and reasoning.

During this period, the International Joint Conference on Artificial Intelligence (IJCAI), first held in 1969, became a key venue for collaboration and information sharing among AI institutions. Governments and companies invested heavily in AI research. But reality proved more difficult than anyone expected.

The Expert System Boom

Scientists worked on building expert systems — programs that copied how human experts make decisions. The main function of these systems was problem solving, as they used expert knowledge to address complex challenges in specific domains. These systems stored facts and rules and used them to solve specific problems.
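
A minimal sketch of the "facts and rules" idea, written in Python. The car-repair rules are invented for this example and do not come from any real expert system. The `forward_chain` helper is also hypothetical. It keeps applying rules until no new conclusions appear, a technique usually called forward chaining.

```python
# Each rule: if all the conditions are known facts, add the conclusion.
RULES = [
    ({"engine_wont_start", "lights_dim"}, "battery_weak"),
    ({"battery_weak"}, "recommend_charging_battery"),
    ({"engine_wont_start", "fuel_gauge_empty"}, "recommend_refueling"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until the set of known facts stops growing."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


result = forward_chain({"engine_wont_start", "lights_dim"}, RULES)
print(sorted(result))
```

Given a situation the rules don't cover, the program simply concludes nothing. That is the narrowness that limited these systems.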

MYCIN helped doctors choose antibiotics for patients with infections. DENDRAL helped chemists figure out the structure of unknown molecules. These systems worked well, but only in narrow areas with clear rules.

To build them, experts had to explain their thinking step by step. This process, called knowledge engineering, took a lot of time and effort. The systems couldn’t handle new situations or learn from mistakes.

The AI Winter Begins

By the early 1970s, AI faced strong criticism. In 1973, British scientist James Lighthill wrote a report saying most AI research had little real-world value. After that, the British government cut much of its AI funding.

Philosophers like Hubert Dreyfus and John Searle also spoke out. They believed machines could never truly think or understand. They said AI researchers were chasing something impossible.

As many projects failed to meet big promises, support for AI dropped. Funding dried up, and some labs shut down or moved to other topics. This time became known as the “AI Winter,” when interest and investment in AI nearly disappeared.

The Neural Network Setback

Neural networks, which tried to copy how the human brain works, also ran into problems. In 1969, Marvin Minsky and Seymour Papert showed in their book Perceptrons that simple, single-layer neural networks couldn’t solve some basic tasks, such as the XOR function. Their critique led many scientists to step away from neural network research for more than a decade.
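
A commonly cited example of such a task is XOR ("one or the other, but not both"). The Python sketch below trains a single-layer perceptron on two small truth tables. The training loop, learning rate, and helper names are assumptions chosen for illustration. The network learns AND perfectly but can never get XOR fully right, because no single straight line separates XOR's two classes.

```python
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum is positive, otherwise stay silent (0)."""
    total = weights[0] * inputs[0] + weights[1] * inputs[1] + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=50):
    """Classic perceptron rule: nudge the weights after every wrong answer."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights[0] += error * inputs[0]
            weights[1] += error * inputs[1]
            bias += error
    return weights, bias


def accuracy(samples, weights, bias):
    """Fraction of samples the trained perceptron classifies correctly."""
    hits = sum(predict(weights, bias, x) == y for x, y in samples)
    return hits / len(samples)


and_accuracy = accuracy(AND_DATA, *train_perceptron(AND_DATA))
xor_accuracy = accuracy(XOR_DATA, *train_perceptron(XOR_DATA))
print(and_accuracy, xor_accuracy)
```

Networks with more than one layer can solve XOR, which is part of why neural networks eventually came back.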

Even with these setbacks, the time wasn’t wasted. Expert systems showed what machines could do with careful planning. Critics helped researchers set better and more realistic goals. And while neural networks were pushed aside for a while, they would later come back and completely change the future of AI.

New Approaches to Intelligence (1980s-2000s)

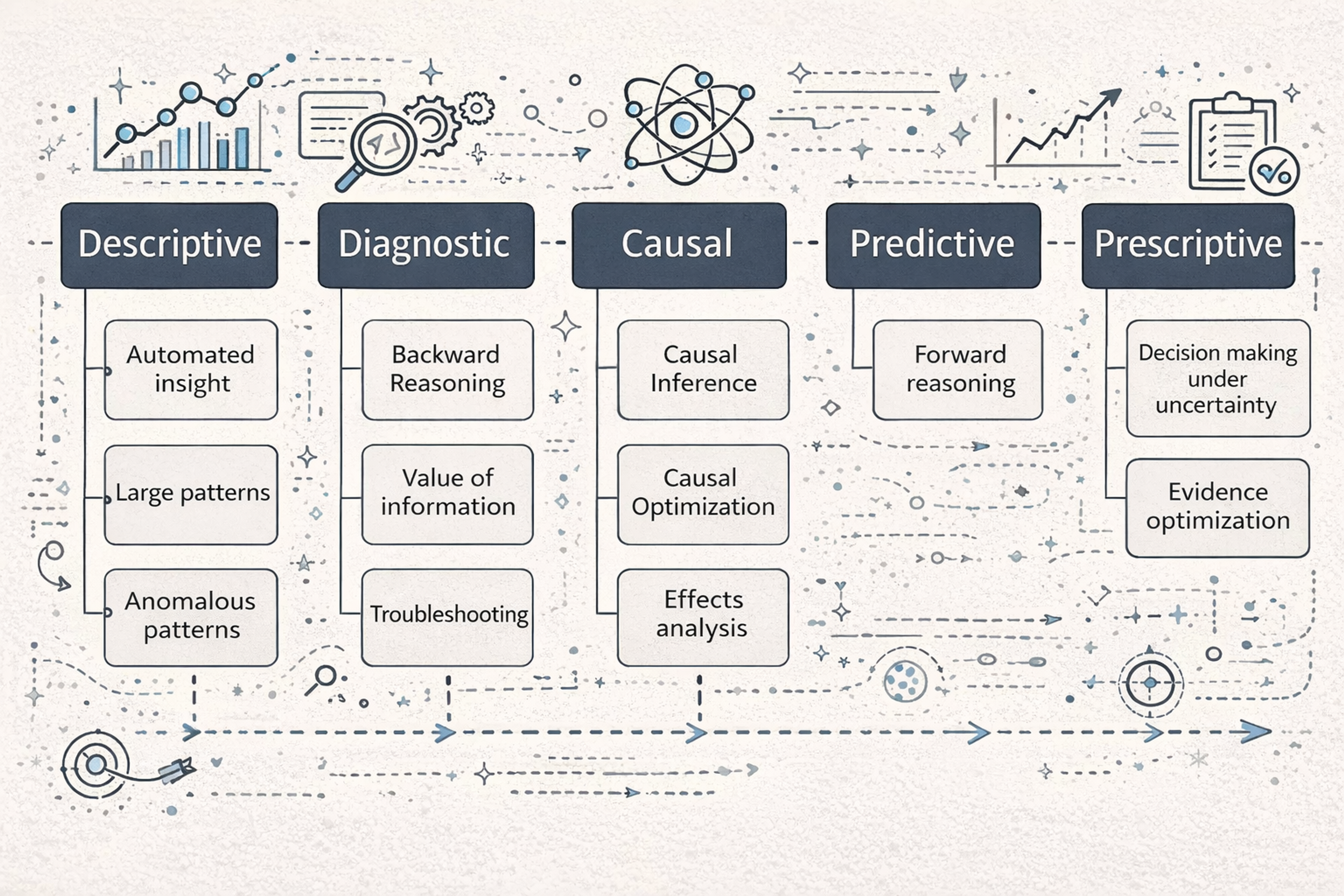

After the AI Winter, researchers looked for better ways to build intelligent systems. Instead of rigid rules, they explored methods that could handle uncertainty and learn from experience. The techniques that emerged in this period let AI systems cope with vague or incomplete information and improve over time.

Fuzzy Logic and Probability

Lotfi Zadeh introduced fuzzy logic, which let machines handle vague ideas like "warm" or "mostly full." This helped AI deal with real-world problems, where things aren’t always clearly true or false.
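
Here is a small Python sketch of the idea. The temperature thresholds and the triangular shape of the `warm` function are assumptions picked for this example. Instead of answering "warm: yes or no," the function returns a degree of warmth between 0 and 1.

```python
def warm(temp_c):
    """Degree (0.0 to 1.0) to which a temperature counts as 'warm'."""
    if temp_c <= 15 or temp_c >= 35:
        return 0.0
    if temp_c <= 25:
        return (temp_c - 15) / 10   # rising edge: 15 C -> 0.0, 25 C -> 1.0
    return (35 - temp_c) / 10       # falling edge: 25 C -> 1.0, 35 C -> 0.0


def fuzzy_and(a, b):
    """A common fuzzy version of AND: take the weaker of the two degrees."""
    return min(a, b)


def fuzzy_not(a):
    """A common fuzzy version of NOT: whatever is left over up to 1."""
    return 1.0 - a


print(warm(10), warm(20), warm(25))  # 0.0 0.5 1.0
```

A temperature of 20 °C is "half warm" here, which is exactly the kind of in-between answer that plain true-or-false logic cannot express.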

Judea Pearl developed Bayesian networks. These systems used probability to help machines make smart choices. They could still reason even when the information was incomplete or unclear.

Bayesian Networks
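
The smallest possible Bayesian network has two linked variables. The Python sketch below uses a made-up example, rain and wet grass, with invented probabilities. Given that the grass is wet, it works out how likely it is that rain was the cause, using Bayes' rule.

```python
# Assumed numbers, for illustration only.
P_RAIN = 0.2                              # chance of rain on any given day
P_WET_GIVEN = {True: 0.9, False: 0.1}     # chance the grass is wet, with and without rain


def joint(rain, wet):
    """Probability of one specific combination of rain and wet grass."""
    p_rain = P_RAIN if rain else 1 - P_RAIN
    p_wet = P_WET_GIVEN[rain] if wet else 1 - P_WET_GIVEN[rain]
    return p_rain * p_wet


def prob_rain_given_wet():
    """Bayes' rule: P(rain | wet) = P(rain and wet) / P(wet)."""
    p_wet = sum(joint(rain, True) for rain in (True, False))
    return joint(True, True) / p_wet


posterior = prob_rain_given_wet()
print(round(posterior, 3))  # 0.692
```

Seeing wet grass raises the estimated chance of rain from 20% to about 69%. The system reaches a useful conclusion without ever observing the rain directly.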

Learning from Evolution

Some researchers looked to nature for inspiration. They created genetic algorithms that mimicked how living things evolve. These programs generated many possible solutions, tested them, and kept the best ones. Over time, they improved without specific programming.
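
The generate, test, and keep-the-best loop can be sketched in Python. This toy version evolves lists of bits toward "all ones." The problem, the population size, the mutation rate, and the `evolve` helper are all assumptions made for illustration, and a fixed random seed keeps the run repeatable.

```python
import random


def fitness(bits):
    """Score a candidate: the more ones, the better."""
    return sum(bits)


def evolve(pop_size=20, length=16, generations=40, mutation_rate=0.05, seed=0):
    """Return (best score at the start, best score at the end)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    initial_best = max(fitness(p) for p in population)
    for _ in range(generations):
        # Test: rank candidates and keep the top half unchanged.
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]
        # Generate: breed children by mixing two parents, with occasional mutations.
        children = []
        while len(survivors) + len(children) < pop_size:
            mom, dad = rng.sample(survivors, 2)
            cut = rng.randrange(1, length)
            child = mom[:cut] + dad[cut:]
            child = [1 - bit if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = survivors + children
    return initial_best, max(fitness(p) for p in population)


initial_best, final_best = evolve()
print(initial_best, final_best)
```

Nobody tells the program how to build a good solution. It only needs a way to score candidates. Because the best candidates always survive unchanged, the top score can never get worse from one generation to the next.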

Smarter Robots

Rodney Brooks, a scientist at MIT, changed robotics with a new idea. He believed machines didn’t need complex planning to act smart. His robots used sensors to react to their surroundings in real time.

They could move through rooms, avoid obstacles, and finish tasks without planning every step. This method, called behavior-based robotics, proved that simple actions could lead to smart behavior. It had a big impact on modern robotics and self-driving technology.

Later milestones built on these ideas, including the first robot sent into space to assist astronauts and emotionally interactive robots like Groove X's Lovot, which was designed to engage with people on a social level. Social robots of this kind are built to recognize and simulate human emotions to improve interaction.

The Agent Revolution

Researchers also created intelligent agents, which were software programs that could act on their own. These agents could learn from experience, make choices, and even work together to solve problems. They became the base for today’s AI assistants and recommendation tools.

These new ideas helped AI grow past simple rule-following. By using learning, adaptation, and handling uncertainty, scientists built systems that worked better in the real world.

IBM's Historic Victories (1950s-2010s)

IBM played a crucial role in AI’s development, creating systems that achieved historic milestones and captured public imagination. IBM's innovations significantly influenced the AI industry, shaping the direction of artificial intelligence research and adoption.

Deep Blue Defeats Kasparov (1997)

In 1997, IBM’s Deep Blue computer beat world chess champion Garry Kasparov. This win surprised many people. Until then, most believed only humans could handle the complexity of chess.

Deep Blue didn’t think like a person. It used powerful computers to examine millions of moves every second. Still, it proved that machines could compete with humans in complex strategy games.

Watson Wins at Jeopardy! (2011)

IBM’s Watson competed on the TV quiz show Jeopardy! against top players Ken Jennings and Brad Rutter. Watson had to understand wordplay, cultural references, and tough questions, then answer within seconds.

Watson won by a wide margin. It showed that AI could understand language and find answers quickly, much like a human. This victory proved that AI could do more than just play games or solve math problems.

TV Quiz Show Jeopardy!

Early Learning Systems

In the 1950s, Arthur Samuel at IBM built a checkers program that learned by playing against itself. It was one of the first examples of machine learning. The program got better through practice, not just code.

These early successes shaped how people saw AI. IBM showed that computers could learn, compete, and understand language — things that once seemed impossible.

The Deep Learning Revolution (2010s)

The 2010s transformed AI completely. Deep learning, a method inspired by how brains work, enabled machines to learn from vast amounts of data. Years of research on neural networks, combined with larger datasets and faster hardware, made modern AI possible.

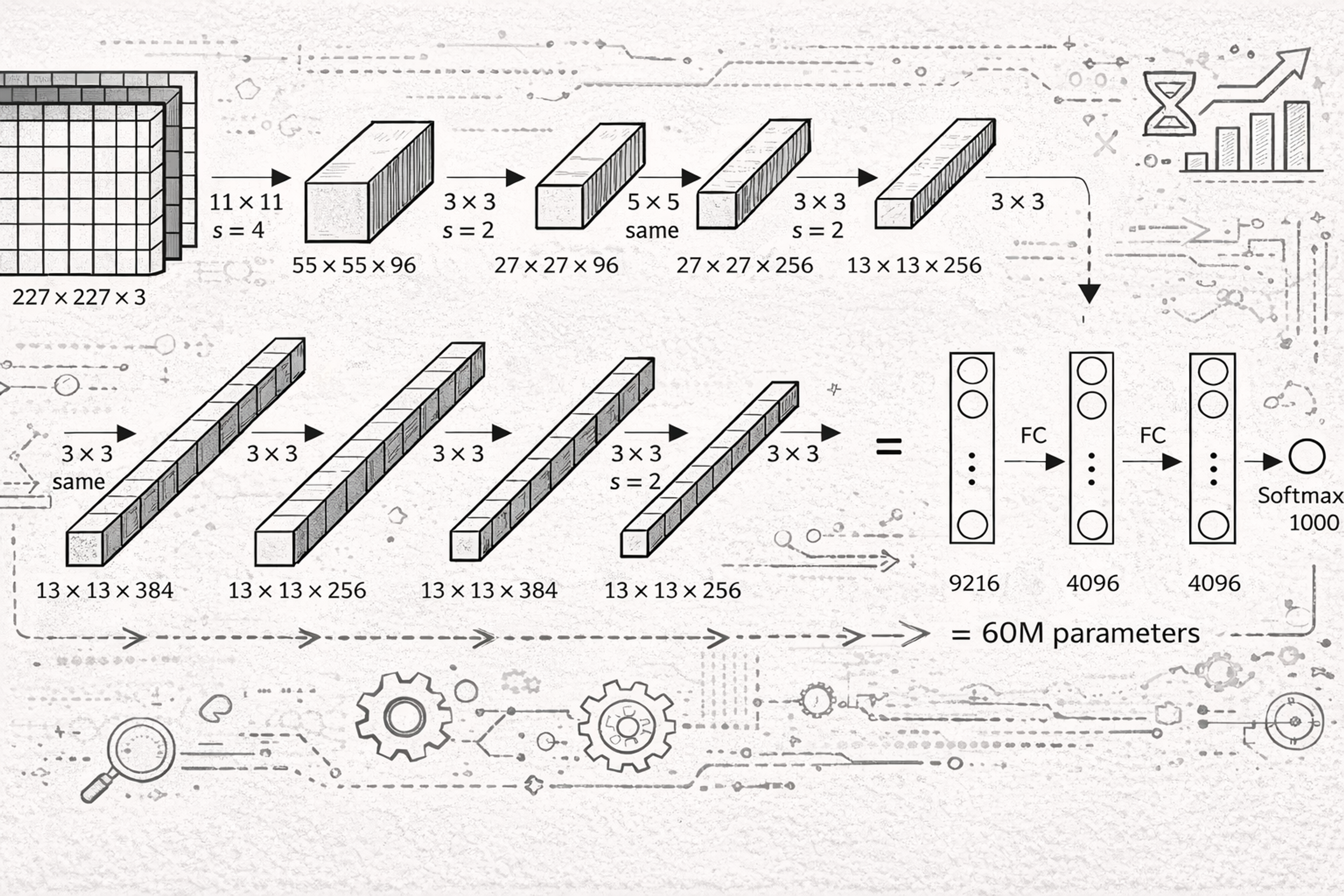

AlexNet Changes Everything (2012)

In 2012, a neural network called AlexNet won the ImageNet contest by a large margin. It excelled at image recognition, recognizing objects in photos better than any system before it. AlexNet used graphics processing units (GPUs) to train faster and work with more data.

AlexNet Used Graphics Processing Units (GPUs)

This success proved that deep learning could handle big tasks. It started a new wave of progress in computer vision, language processing, and machine learning.

Key Players and Technologies

Geoffrey Hinton, Yann LeCun, and Yoshua Bengio, known as the “Godfathers of Deep Learning,” helped develop key neural network methods. For years, many ignored their work. But it later became the base of modern AI.

In 2016, AlphaGo, an artificial intelligence program built by Google’s DeepMind, beat world champion Lee Sedol at the game of Go. Go is much more complex than chess. Experts thought AI wouldn’t master it for decades. AlphaGo’s win proved that deep learning could solve very complex problems.

The Transformer Revolution

In 2017, Google researchers shared a new idea called the transformer in a paper titled Attention Is All You Need. This method changed how AI systems understand and process language. Transformers revolutionized natural language processing by greatly improving AI's ability to interpret and generate human language.
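
The core operation in that paper is called scaled dot-product attention. For each word, the model scores how relevant every other word is and then blends their information using those scores. The Python sketch below is a bare-bones version with tiny made-up vectors. Real transformers add learned weights, many attention "heads," and much larger vectors, none of which are shown here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """For each query: score every key, then take a weighted mix of the values."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Dot product of the query with each key, scaled by sqrt of the key size.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


# Made-up example: both keys match the query equally, so the values are averaged.
output = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 1.0], [1.0, 1.0]],
    values=[[0.0, 2.0], [4.0, 6.0]],
)
print(output)  # [[2.0, 4.0]]
```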

Transformers made it possible to build large language models like OpenAI’s GPT series. By 2020, GPT-3 could write essays, answer questions, and even create code. Its responses often sounded natural and human-like.

What Is GPT3?

Hardware Advances

NVIDIA’s graphics chips, called GPUs, became key tools for training deep learning models. These chips could handle the huge number of calculations that neural networks need. Google also built its own chips, called TPUs, made just for AI tasks.

Cloud platforms from Amazon, Microsoft, and Google gave people around the world access to powerful AI tools. This made it easier for researchers and companies to build and use AI. As more people joined in, progress sped up.

The deep learning revolution showed that AI could learn, improve, and solve real problems. It started the AI era we live in today.

Who Controls AI Today?

As AI becomes more powerful, questions about ownership and control become increasingly important. The answers shape how AI develops and who benefits from it.

Big Tech Dominance

Today, major tech companies control the most advanced AI systems. OpenAI made ChatGPT and GPT-4. Google’s DeepMind built AlphaGo and powerful language tools. Meta, once called Facebook, creates AI for social media and virtual reality.

Microsoft adds AI into its software, like Word and Excel. Microsoft has also integrated advanced AI into its search engine Bing, using generative models to enhance search capabilities.

These companies have the money, data, and experts needed to build the most advanced AI systems.

The Open Source Movement

Not all AI comes from big companies. Groups like Hugging Face and Mistral AI share models with the public. This open-source method lets smaller teams and researchers use powerful AI tools.

But open-source AI brings risks too. When anyone can use advanced AI, it’s harder to stop misuse. Some people worry about deepfakes, false news, and other harmful uses.

Government Involvement

Governments around the world are spending a lot on AI research and development. The United States, China, and the European Union have all launched major AI programs. These efforts help them stay ahead in technology and protect national security.

Governments are also making rules to guide how AI is used. The European Union’s AI Act sets limits on high-risk AI tools. China has rules for content made by AI. The United States is working on its own set of AI rules, too.

Ethical Challenges

AI systems can reflect or even worsen human biases. If the training data is unfair, the AI might make wrong or unfair choices. This can affect things like hiring, loans, or criminal justice. Fixing these problems means using better data and designing systems more carefully.

Many AI tools are like “black boxes.” They give answers but don’t explain how they made their choices. This lack of clarity is a big problem, especially in areas like healthcare and law.

Another concern is who controls AI. Right now, a few companies and countries lead the field. This raises questions about fairness. Will AI help everyone, or just a small group?

These are important questions. The way we handle them will shape whether AI works for all people or only a few. Emphasizing responsible development is crucial to ensure that ethical challenges in AI are addressed and that technology benefits society as a whole.

AI Today: The Age of Generative Intelligence (2020s)

The 2020s brought AI directly into everyday life. Generative AI systems can create text, images, music, and videos. These tools are changing how we work, learn, and express ourselves.

The Generative AI Boom

ChatGPT came out in late 2022 and quickly got the world’s attention. This AI can write essays, solve problems, and answer questions on many topics. It reportedly reached 100 million users within about two months, faster than any consumer app before it.

Other generative AI tools soon followed. DALL·E and Midjourney turn text into images. GitHub Copilot helps people write code. Adobe’s AI tools make editing photos and designing easier. These tools show how AI can be creative too.

Multimodal AI Systems

The newest AI systems can work with multiple types of information simultaneously. GPT-4 can analyze images and text together. Google’s Gemini can understand text, images, audio, and video. These multimodal systems work more like humans, combining different senses to understand the world.

Artificially intelligent robots like Sophia are examples of advanced AI with human-like qualities, demonstrating how artificial intelligence technology enables robots to interact in ways similar to people.

AI Goes Mainstream

AI is no longer just for tech experts. Microsoft added AI to Word, Excel, and PowerPoint. Google built it into search and other tools. Smartphones now have AI features like smart cameras, voice assistants, and translation.

This wider use of AI has both good and bad sides. More people can use it to work better and be more creative. But it also raises concerns about lost jobs, fake news, and privacy risks.

The Race for Artificial General Intelligence

Some researchers are trying to create Artificial General Intelligence, or AGI. This type of AI could match or even beat human intelligence in many areas. Companies like OpenAI, Google DeepMind, and Anthropic are putting a lot of money into this goal.

AGI could help solve big problems like disease or climate change. But it also comes with serious risks. If we don’t control AGI well, it could act in ways that harm people or go against human values.

The 2020s are a turning point for AI. These tools are getting smarter, easier to use, and part of everyday life. For example, advances in self-driving technology show how AI can transform transportation and affect society on a larger scale. The choices we make now will shape how AI affects the future.

What Comes Next

AI development continues to accelerate, but predicting its future remains challenging. Some changes seem likely in the near term, while others may take decades or never happen at all.

The Near Future (2025-2030)

AI assistants will soon become more useful and personal. They won’t just answer questions — they’ll help plan your day, manage tasks, and support learning. These tools will understand more and hold longer, smarter conversations.

In schools, AI will bring personalized tutoring that fits each student’s style and pace. In healthcare, it will help doctors find problems faster and suggest better treatments. In law, AI will help people read contracts and understand legal steps.

Self-driving cars will appear more often, first in simple routes and safe conditions. AI will also speed up research by helping find new materials, medicines, and answers to tough problems.

The Long-Term Outlook (2030+)

Artificial General Intelligence is still a future goal. Experts disagree on when it might happen. Some think it could come in a few decades. Others believe it may never arrive. If AGI does become real, it could help us make faster scientific discoveries and solve problems we can’t handle today.

But AGI also brings serious questions. How do we make sure it stays safe? What happens to jobs if machines can do most work? How do we keep our role and purpose in a world with super-smart machines?

Some researchers worry about an “intelligence explosion,” where AI keeps making itself smarter until it passes human understanding. Others think this idea is too early or unlikely.

The Choices Ahead

AI’s future is not set in stone. The decisions we make about research, safety, and rules will guide how it grows. We need smart policies that help AI do good while reducing harm.

We must ask big questions. How do we make sure AI helps everyone, not just the rich? How do we keep control over powerful systems? How do we support new ideas without risking safety? And how do we protect human skills and purpose as AI gets smarter?

AI’s future depends not just on new technology, but on the choices we make as a society.

Conclusion

Artificial intelligence began as an idea from early thinkers. After years of research and progress, it became one of the world’s most powerful tools.

Today, AI helps doctors, drives cars, writes text, and supports billions through apps and devices. What once seemed like science fiction is now part of daily life.

But AI’s story isn’t finished. The choices we make now will shape whether it helps or harms. Its power brings both promise and responsibility.

The future of AI depends on all of us — scientists, leaders, and everyday people — working together to guide it wisely.

About Clay

Clay is a UI/UX design & branding agency in San Francisco. We team up with startups and leading brands to create transformative digital experiences. Clients: Facebook, Slack, Google, Amazon, Credit Karma, Zenefits, etc.
