Artificial intelligence (AI) and human intelligence come from very different roots. AI is a product of engineering: computer programs trained to spot patterns and minimize errors. Human intelligence, by contrast, is rooted in biology; our minds develop through physical experience, culture, feelings, and goals.

Artificial general intelligence (AGI) refers to AI systems that could understand, learn, and apply knowledge across a wide range of tasks at a level comparable to a human being. Unlike current AI, which is specialized and narrow, AGI aims to match the adaptability and broad cognitive abilities of humans. Even so, artificial and human intelligence differ fundamentally in how they operate.

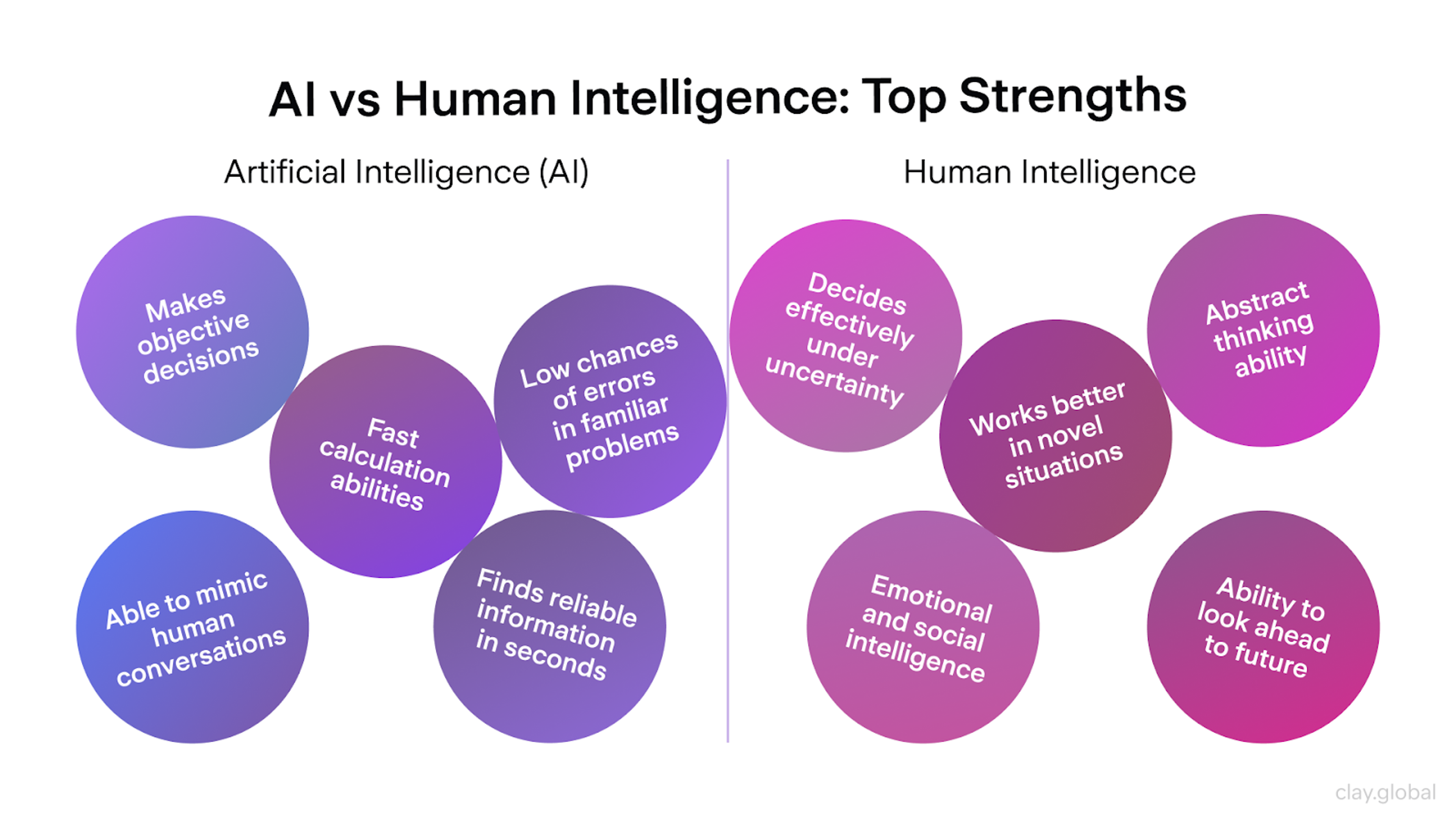

Confusing the two, or treating AI as a digital copy of the human mind, leads to poorly designed tools. Real gains come when each side plays to its strengths: AI offers speed and consistent accuracy, while people provide judgment, ethics, and varied context.

This post sketches out those fundamental differences, examines key strengths side by side, and suggests a smart way to decide when to pick AI, a human, or a mix. For easy starting points, the UTHealth SBMI site has a clear chart of roles, and Maryville University offers a guide to how people shine in creativity, empathy, and flexible thinking.

AI vs Human Intelligence by Clay

What Are AI and Human Intelligence

When we talk about AI, we mean the tools available today: classic rule-based systems, machine learning, and deep learning, each built for a specific job. Transformer networks translate text; vision transformers analyze photos and video.

AI models, including large language models, rely on training data and mathematical models to perform tasks such as data mining, pattern recognition, and problem-solving. Reinforcement learning improves a sequence of decisions through trial, error, and reward, and some systems blend neural networks, probabilistic models, and symbolic programs.

Their primary skill is recognizing patterns, adjusting them, and doing it all over large datasets, faster and more consistently than any human can. While these systems are powerful, they are still considered narrow AI, and the broader goal of achieving general AI — systems with human-level intelligence and adaptability — remains a challenge.

When we talk about human intelligence, we mean a kind of adaptable thinking that grows from being in a body and among other people. It combines seeing, moving, remembering, talking, feeling, and deciding.

Human cognitive abilities let people adapt, solve problems creatively, and learn quickly from just a few examples. Because of this, humans can carry knowledge into new situations and weigh options using shared norms and personal values.

Researchers, including Korteling and colleagues, argue that human and machine intelligence are complementary rather than copies of each other. Their work compares biological and artificial intelligence and offers guidance on whether a human or an AI should make a given choice.

How Learning Differs

AI learning relies on patterns. A model is fed vast datasets, adjusts its parameters to lower its error rate, and then tries to generalize beyond its training set. Models like BERT popularized a two-step approach: pretrain on a broad dataset, then fine-tune on specific tasks (one training run, many adaptations). The key difference is that AI typically needs many examples to learn a task, while humans can often learn from just a few.
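To make "adjust parameters to lower the error rate" and "one training run, many adaptations" concrete, here is a toy sketch in Python with NumPy. It is only an analogy, assuming a simple linear model trained by gradient descent on mean squared error, not anything resembling BERT: the model is first fit on a large, broad dataset, and those weights then serve as the starting point for a short fine-tuning run on 20 task-specific examples. The function names and datasets are hypothetical.

```python
import numpy as np


def fit_linear(X, y, w=None, lr=0.1, steps=500):
    """Lower mean squared error by gradient descent, optionally starting from existing weights."""
    n, d = X.shape
    w = np.zeros(d) if w is None else w.copy()
    for _ in range(steps):
        grad = (2.0 / n) * X.T @ (X @ w - y)  # gradient of the MSE with respect to w
        w -= lr * grad                         # step in the direction that lowers the error
    return w


def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))


rng = np.random.default_rng(0)

# "Pretraining": many examples generated by a broad, shared rule (plus noise).
base_rule = np.array([2.0, -1.0, 0.5])
X_broad = rng.normal(size=(5000, 3))
y_broad = X_broad @ base_rule + rng.normal(scale=0.1, size=5000)
w_pretrained = fit_linear(X_broad, y_broad)

# "Fine-tuning": only 20 examples from a related task whose rule differs slightly.
task_rule = base_rule + np.array([0.3, 0.0, -0.2])
X_task = rng.normal(size=(20, 3))
y_task = X_task @ task_rule
w_finetuned = fit_linear(X_task, y_task, w=w_pretrained, steps=50)

print("task error before fine-tuning:", mse(X_task, y_task, w_pretrained))
print("task error after fine-tuning: ", mse(X_task, y_task, w_finetuned))
```

Starting from the pretrained weights means the small dataset only has to correct a small gap, which is the intuition behind pretraining once and adapting many times.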

In contrast, human learning is grounded in the body and embedded in social contexts. We form ideas by moving, talking, and feeling in a cultural setting or with explicit instruction. Our unique cognitive capacities allow us to learn from just a few examples and readily adjust goals or redefine problems. Thanks to metacognition — thinking about our thinking — we can review our methods, spot weaknesses, and change our approach.

Quick Tip: AI can scale faster than any team when there’s lots of precise, labeled data. Humans still lead if data dries up, goals shift, or examples run thin, especially in problem solving where adaptability and creativity are crucial. The most innovative systems blend both: machines spot and tidy up patterns, while people read, rethink, and make choices.

Embodiment and Situated Cognition

Smarts aren’t only about math. They grow from being in the world. Both AI and humans interact with their environment, but humans adapt more deeply, drawing on context and experience in ways AI cannot.

Embodied AI systems, robots packed with sensors and motors, show that ability grows through constant back-and-forth with the world: they learn to balance, lift, and find their way.

Yet even the most sensor-rich robots miss the long history and rich mix of sights, sounds, body cues, and social signals that humans absorb. Humans are social creatures whose intelligence develops through a lifetime of interaction with others, something AI cannot fully replicate. We also “off-load” thinking into the tools we grab, the rooms we work in, and the people we turn to.

Why It Matters: When jobs call for fine motor skill, social sensitivity, or reading the room (think surgery, caregiving, negotiation, or teaching), pairing robotic precision with human leadership and trust gives the strongest results.

Cognitive Comparison

This section compares the cognitive functions of AI and humans, highlighting their distinct strengths and limitations.

Perception. AI can fuse different data types, such as thermal and hyperspectral signals, across large volumes. It “sees” things we can’t. We humans, by contrast, tie what we sense to our goals, beliefs, and general knowledge.

We turn raw data into meaning. Best practice: let AI handle triage, isolate anomalies, and suggest priorities. Assign humans to messy, high-stakes situations where the meaning isn’t clear.
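As a minimal sketch of that division of labor, the Python below scores new readings against a known-normal baseline with a simple z-score, ranks them, and routes each one. The thresholds (3 and 2 standard deviations), the route labels, and the sample data are hypothetical; real systems often use more robust anomaly detectors than a z-score.

```python
from statistics import mean, stdev


def triage(baseline, incoming, escalate_z=3.0, review_z=2.0):
    """Score new readings against a known-normal baseline, rank them, and route each one.

    Clear outliers are escalated, borderline cases go to a person to interpret,
    and readings close to normal are cleared automatically.
    """
    mu, sigma = mean(baseline), stdev(baseline)
    queue = []
    for item_id, value in incoming.items():
        z = abs(value - mu) / sigma  # distance from normal, in standard deviations
        if z >= escalate_z:
            route = "escalate: likely anomaly, human investigates"
        elif z >= review_z:
            route = "review: meaning unclear, human decides"
        else:
            route = "auto-clear"
        queue.append((item_id, z, route))
    # Most unusual readings first, so people see the suggested priorities at the top.
    return sorted(queue, key=lambda row: row[1], reverse=True)


baseline = [10, 11, 9, 10, 10, 11, 9, 10]
for item_id, z, route in triage(baseline, {"a": 10.2, "b": 11.8, "c": 14.0}):
    print(f"{item_id}: z={z:.1f} -> {route}")
```

The AI does the tireless part (scoring and ranking everything), while the "review" band is deliberately reserved for a person, because that is where the meaning is least clear.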

Attention. AI focuses on a defined task without fatigue, while humans can shift gears and redirect focus when new priorities arise. Combine round-the-clock AI monitoring with human judgment that readjusts priorities as new context emerges.

Memory. AI stores an enormous amount of data and retrieves it exactly. Our memory is more about reconstructing stories based on context — it’s less accurate but more relatable. A key limitation in human recall is working memory, which restricts how much information we can process and retain at once. Use AI for fast data recall and summary. Use humans to draw connections, make value-based judgments, and settle on decisions.

Language. AI can generate fluent text and concise summaries, but it struggles with the nuance, context, and emotional depth of human language. Humans pick up sarcasm, background undercurrents, and hidden intentions that AI misses. Abstract thinking is also a human strength, letting us interpret symbolism and complex ideas that AI struggles to handle.

Typical Failure Modes

AI Failure Modes

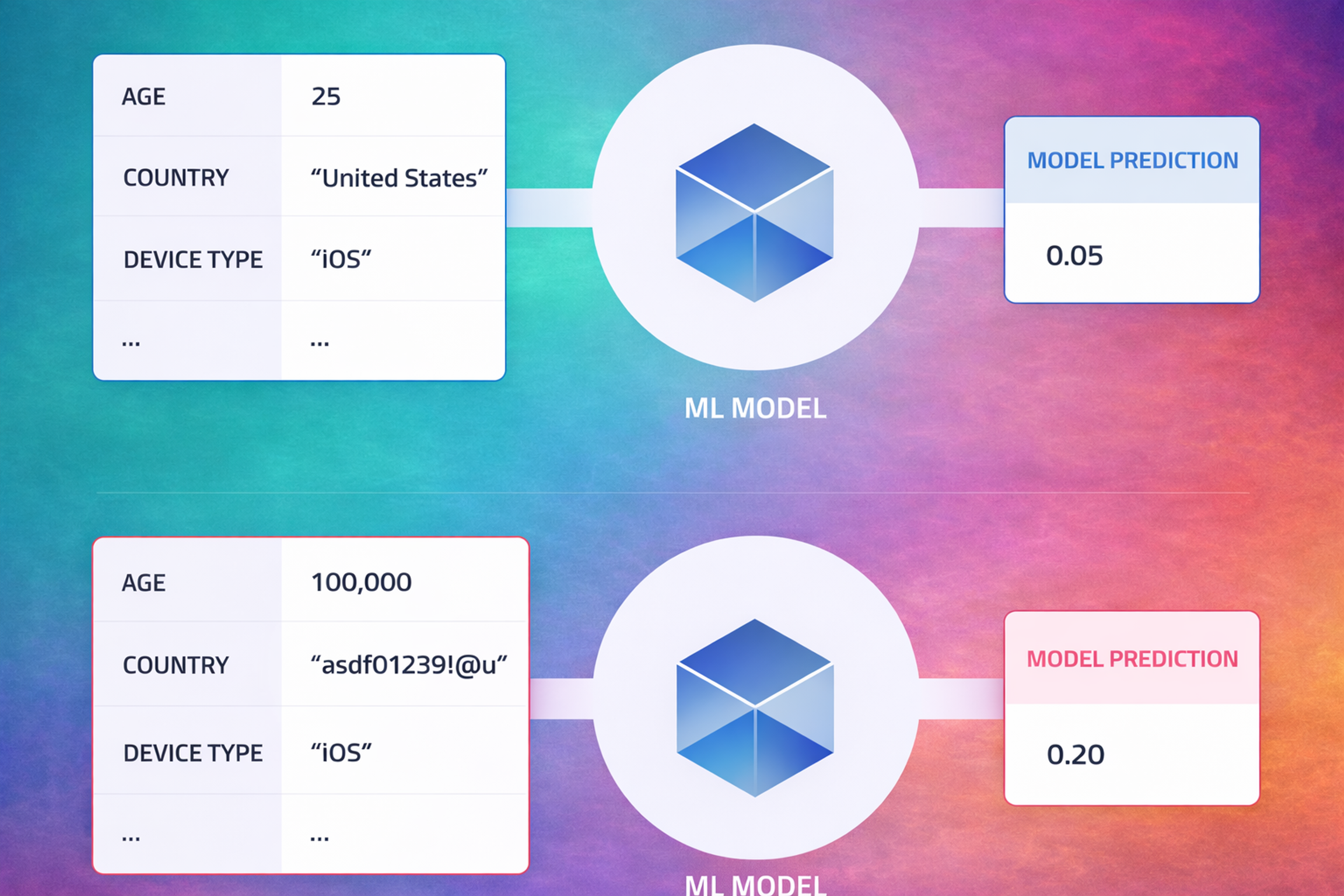

AI systems can fail when conditions shift away from what they were trained on, when bias in their datasets leaks into outputs, when they generate confident but wrong information, or when performance degrades quietly over time. Trust in AI should rest on rigorous verification, stress testing, and careful calibration, not on convincing explanations the AI generates about itself.
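Calibration can be measured rather than assumed. One common summary is expected calibration error (ECE): group predictions into confidence bins and compare each bin's average confidence with how often the model was actually right. The Python sketch below uses ten equal-width bins, which is one standard variant among several; the function name and sample data are our own.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Equal-width-bin ECE: the average gap between stated confidence and observed accuracy.

    confidences: model probabilities for its chosen answers, in [0, 1]
    correct:     1 if that answer turned out to be right, else 0
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Map confidence to a bin index; a confidence of exactly 1.0 goes in the top bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        ece += (len(members) / n) * abs(avg_conf - accuracy)  # weight each bin by its size
    return ece


# A model that says "90% sure" but is right only half the time is overconfident.
overconfident = expected_calibration_error([0.9] * 10, [1] * 5 + [0] * 5)
well_calibrated = expected_calibration_error([0.9] * 10, [1] * 9 + [0])
print(round(overconfident, 2), round(well_calibrated, 2))
```

A large ECE is a concrete, checkable sign of the "confident but wrong" failure mode, which is exactly what a fluent self-explanation cannot reveal.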


Human Failure Modes

Humans get tired, make inconsistent choices, and fall for biases such as over-relying on the first number we see (anchoring), overweighting recent or easily recalled examples (availability), and going along with whatever the group agrees on (groupthink). Teams can settle for what’s easy, let documentation go stale, and allow shared but unverified beliefs to harden into dangerous assumptions.

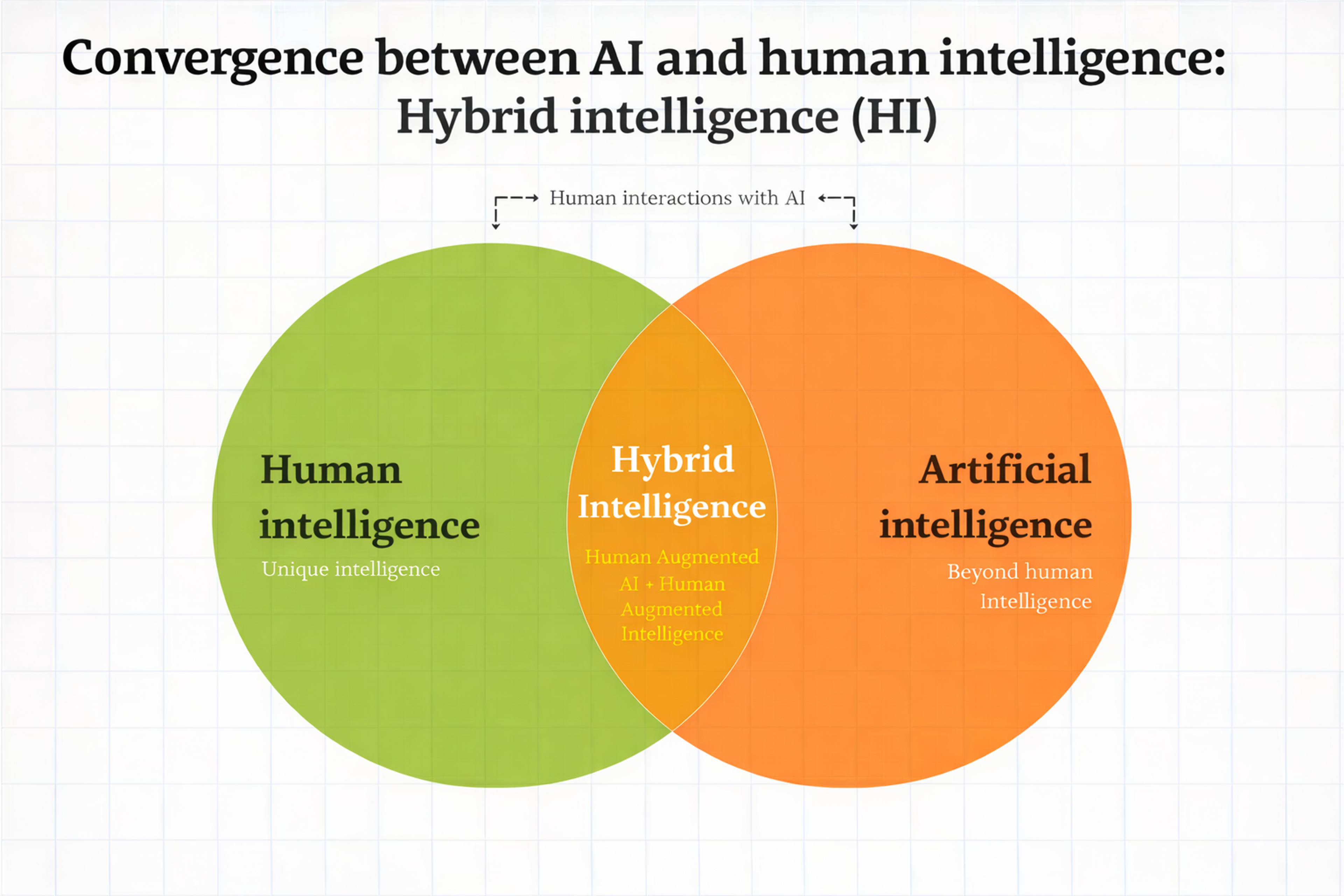

Why Hybrid Helps

AI can handle tasks that require more attention than any person can give, while humans bring the real-world sense that AIs lack. The craft lies in designing the interface: deciding who makes which choice, when someone steps in to check work, and how to pass tasks between AI and people smoothly.
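Here is a minimal Python sketch of such an interface, under stated assumptions: the `Case` fields, the 0.95 auto-approval threshold, and the idea that a policy (not the model) marks cases as high-stakes are all hypothetical choices for illustration. It decides who owns each decision, when a person steps in, and what context travels with the handoff.

```python
from dataclasses import dataclass


@dataclass
class Case:
    case_id: str
    ai_suggestion: str
    confidence: float   # assumed to be a calibrated probability that the suggestion is right
    high_stakes: bool   # set by policy, not by the model


def assign(case: Case, auto_threshold: float = 0.95) -> dict:
    """Decide who owns the decision and whether a person must step in."""
    if case.high_stakes:
        owner, check = "human", "AI suggestion shown as advice only"
    elif case.confidence >= auto_threshold:
        owner, check = "AI", "random sample audited by a human"
    else:
        owner, check = "human", "review the AI suggestion before acting"
    # The handoff carries the AI's output and confidence so the person starts with context.
    return {"case": case.case_id, "owner": owner, "check": check,
            "ai_suggestion": case.ai_suggestion, "confidence": case.confidence}


print(assign(Case("A-17", "approve", 0.98, high_stakes=False))["owner"])  # AI
print(assign(Case("A-18", "approve", 0.98, high_stakes=True))["owner"])   # human
```

Checking stakes before confidence is a deliberate choice: even a very confident model does not get the final say where consequences are severe.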

Lessons from Healthcare

The health care sector sharpens the discussion, showing both the enormous potential of AI applications and why we must remain careful.

Positive signal (screening): A study using data from the USA and the UK assessed an artificial intelligence tool for breast cancer screening. It reduced false positives by 5.7% in the USA and 1.2% in the UK, and false negatives by 9.4% and 2.7% in the same regions (absolute reductions). These numbers suggest such a tool can help doctors when it has been extensively tested.

Mixed/negative signal (triage and workflow): Some studies of newer AI tools that prioritize cases for doctors have found no improvement, or even a worsening, in diagnostic accuracy and wait times when the AI is added to existing systems. These results highlight that AI must be woven thoughtfully into the workflow and continuously monitored to be effective.

Cautionary tale (overclaiming): MD Anderson stopped a prominent cancer-care partnership with IBM Watson. IBM later sold Watson Health’s analytics unit to Francisco Partners, which now markets it as Merative. The episode teaches a clear design lesson: build support tools that are thoroughly validated and that keep doctors in the loop, rather than trying to replace the doctor’s judgment.

Safety and Governance

Two frameworks can help create trust in AI systems:

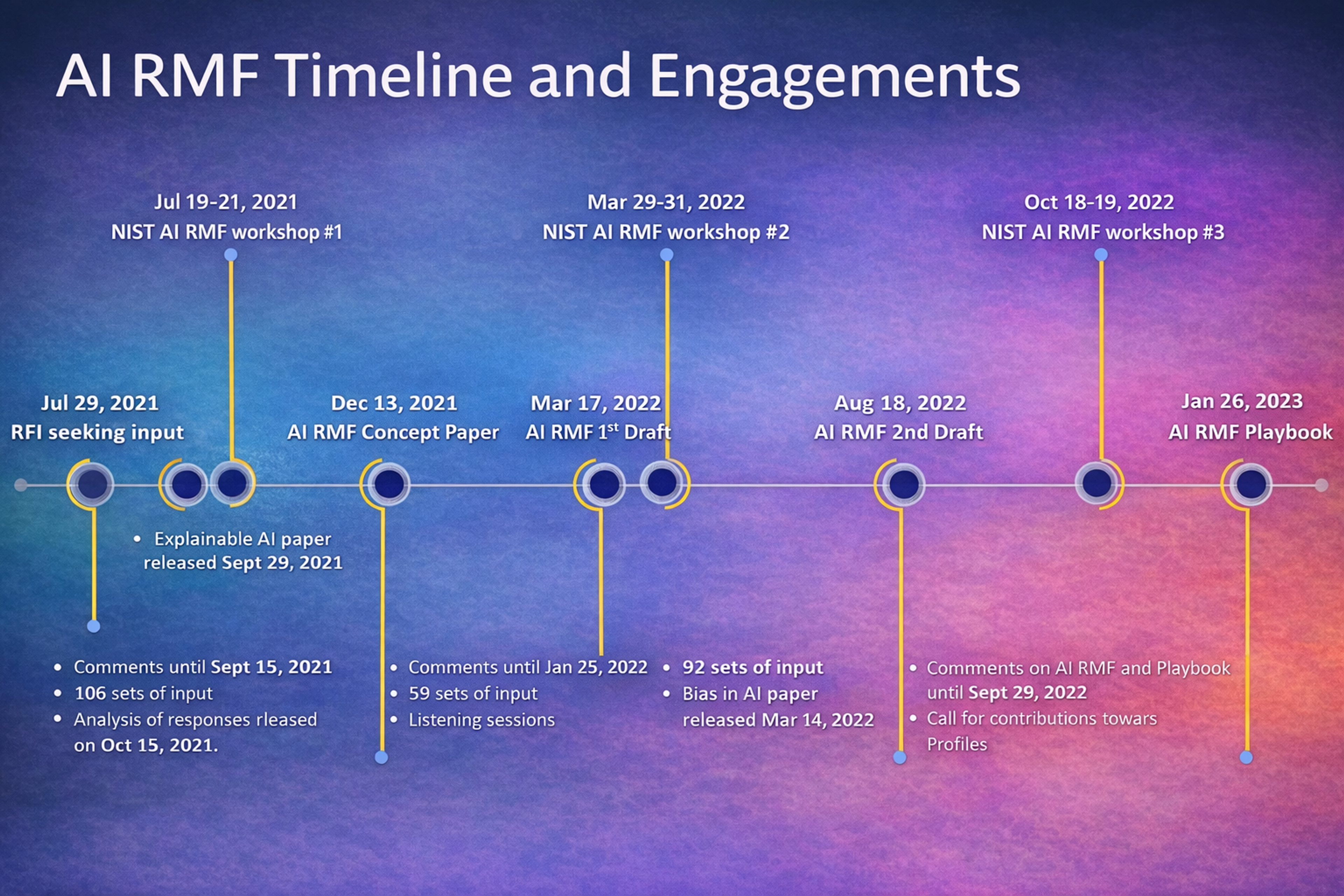

- NIST AI Risk Management Framework: This voluntary U.S. guide, organized around four core functions (Govern, Map, Measure, Manage), stresses identifying, measuring, and managing risks, ongoing oversight, and precise documentation of data and decisions. It also highlights the importance of integrating ethical considerations into AI governance to address potential biases and societal impacts.


- EU AI Act (Article 14): This European law requires effective human oversight of high-risk AI systems and, through its other provisions, requires those systems to be robust and audit-ready, with a strong focus on protecting health, safety, and fundamental rights in sensitive applications.

The Act entered into force on August 1, 2024, and applies in phases. Rules for general-purpose AI models apply from August 2, 2025, while high-risk requirements phase in over the following years. Even if you’re outside the EU, these deadlines make solid design targets.

The main takeaway is to treat regulatory oversight as a core design feature. Build approval gates, clear escalation paths, fallback modes, and ongoing drift monitoring into the very first prototype (see the decision framework below). Well-governed AI can be both safe and a real productivity gain across an organization.
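Drift monitoring can start very simply. The Python sketch below uses the population stability index (PSI), one common way to compare a feature's distribution at launch with its distribution later; it is our choice of technique, not something the frameworks above prescribe. The cut points, sample data, and the 0.1 and 0.25 alert levels (widely used rules of thumb, not official thresholds) are assumptions.

```python
import bisect
import math


def bin_shares(values, cut_points, eps=1e-6):
    """Share of values in each bin; the outer bins catch anything below or above the cut points."""
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect.bisect_right(cut_points, v)] += 1
    # eps keeps empty bins from producing log(0) below.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(reference, current, cut_points):
    """PSI = sum over bins of (current share - reference share) * ln(current / reference)."""
    ref = bin_shares(reference, cut_points)
    cur = bin_shares(current, cut_points)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_status(psi):
    # 0.1 and 0.25 are common rules of thumb; tune them for each system.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift: review"
    return "significant drift: escalate to a human owner"


reference = list(range(100))            # e.g. a feature's values at launch
shifted = [v + 50 for v in reference]   # the same feature months later
cuts = [25, 50, 75]
print(drift_status(population_stability_index(reference, reference, cuts)))  # stable
print(drift_status(population_stability_index(reference, shifted, cuts)))
```

Mapping each drift level to a named action, including an escalation to a person, is what turns a metric into the kind of escalation path described above.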

Consciousness and Understanding

Processing is not the same as understanding. Searle’s Chinese Room argument holds that manipulating symbols does not amount to comprehension. The argument has its critics, but for engineers it is a useful check on the impulse to anthropomorphize a system.

Global (Neuronal) Workspace theory proposes that information becomes conscious when it is broadcast widely across a large network of brain regions; the theory does not imply that current models are conscious. Current AI systems lack self-awareness: they have no understanding of their own states or actions. Practical takeaway: assume present systems can mimic talk about feelings and beliefs without any subjective awareness. Accountability lies with users and designers, not the machine.

In summary, human and artificial intelligence are two distinct forms of intelligence that differ in how they arrive at understanding and in whether consciousness is involved at all.

Real-world Domains

Healthcare

Use AI to speed up image assessment, summarize findings, and highlight potential risks. Clinicians still own the ultimate diagnosis, patient consent, risk discussion, and decision-making. Keep the tools under regular review and adjust them to the specific clinical setting. The evidence so far is mixed.


Finance and Operations

AI tracks transaction flows, predicts demand, spots unusual patterns, and ranks review cases. Humans still define risk appetite and investigate outliers.

Recent anti-money-laundering results include sharp drops in alert volumes and higher confirmation rates. HSBC’s pilot cut alerts by 60% while identifying 2 to 4 times as many true positives using Google Cloud’s AML platform.
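A quick worked example shows why fewer alerts combined with more true positives means a much higher confirmation rate. Assume, for illustration only, that "cut alerts by 60%" refers to total alert volume $A$, that "2 to 4 times" refers to the number of true positives $TP$ found (a factor $k$), and that both are measured over comparable periods. Taking the confirmation rate as true positives per alert:

```latex
\[
\text{confirmation rate} = \frac{TP}{A}, \qquad
\frac{\text{new rate}}{\text{old rate}}
  = \frac{k\,TP / (0.4\,A)}{TP / A}
  = \frac{k}{0.4} = 2.5\,k,
\qquad k \in [2, 4] \;\Rightarrow\; 5\times \text{ to } 10\times .
\]
```

Under those assumptions, each alert would be roughly 5 to 10 times as likely to be a genuine case, which is why investigators' time goes much further.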

A vendor study showed a 33% drop in false positives at one retail bank. Success hinges on dataset quality, governance, and system integration.

Education and Knowledge Work

AI can personalize practice and pacing, while teachers guide motivation, social skills, and higher-level learning. In one large field study of customer support agents, generative AI raised productivity by around 14%, with the biggest gains for less experienced agents. Early AI tutoring studies show faster learning with the same or less effort, but designs and settings differ, so treat the results as promising and keep evaluating.

Creative work

Let models generate a broad range of drafts, options, and styles while humans define purpose, voice, and quality. Always credit the person who sets the vision and makes the final calls, and disclose AI’s role where transparency matters.

Advanced Mechanisms

Automate Bounded Steps

Begin by automating narrow, well-understood tasks. Those tasks should have clear limits and be backed by rigorous testing. Keep humans in control whenever the choice could lead to severe consequences or when the answer is uncertain.

Set up formal drills for handing off tasks between AI and human operators. Record every decision, and schedule regular practice sessions to simulate failures and rehearse the responses.
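"Record every decision" is easiest to honor with an append-only log that can be replayed during drills and audits. The Python sketch below writes one JSON object per line; the `DecisionRecord` fields and helper names are hypothetical, chosen to capture who decided, with which model version, at what confidence, and whether a human overrode the model.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One entry in an append-only decision log (field names are illustrative)."""
    case_id: str
    decided_by: str          # "model" or the reviewer's ID
    action: str
    model_version: str
    confidence: float
    human_override: bool = False
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


def to_log_line(record: DecisionRecord) -> str:
    # One JSON object per line keeps the log easy to append to and to replay.
    return json.dumps(asdict(record), sort_keys=True)


def from_log_line(line: str) -> DecisionRecord:
    return DecisionRecord(**json.loads(line))


def override_rate(records):
    """Share of decisions where a human replaced the model's action."""
    return sum(r.human_override for r in records) / len(records) if records else 0.0


record = DecisionRecord("case-42", "model", "approve", "risk-model-v3", 0.97)
print(to_log_line(record))
```

Tracking a simple figure such as the override rate over time gives the practice sessions something concrete to review.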

“Intuition-like” Behavior

Symbolic reasoning gives you clear rules and provable guarantees. Probabilistic models handle uncertainty with formal rigor. Neural networks recognize patterns in messy, high-dimensional data.

Combine these strengths: let rules filter for compliance, then use learned models to handle the messy edge cases. “Intuition-like” behavior from models is just rapid pattern matching. It can be helpful for fast responses, but always keep detailed logs and run historical backtests to confirm reliability.
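A minimal Python sketch of "rules first, then a learned model", assuming hypothetical compliance rules (a hard amount limit and a required identity check) and a stand-in scoring function in place of a trained model. Every outcome carries a reason string that can go straight into the decision log, and the uncertain middle band is sent to a person.

```python
from typing import Callable, Optional

Case = dict
Rule = Callable[[Case], Optional[str]]

# Hypothetical compliance rules: each returns a reason when a case must be blocked outright.
RULES: list[Rule] = [
    lambda case: "amount above hard limit" if case["amount"] > 10_000 else None,
    lambda case: "missing identity check" if not case["id_verified"] else None,
]


def decide(case: Case, score: Callable[[Case], float],
           approve_at: float = 0.8, reject_at: float = 0.2) -> tuple[str, str]:
    """Rules first (clear, auditable), then a learned score for everything the rules allow."""
    for rule in RULES:
        reason = rule(case)
        if reason is not None:
            return "reject", f"rule: {reason}"
    p = score(case)  # stand-in for a trained model's probability that the case is fine
    if p >= approve_at:
        return "approve", f"model p={p:.2f}"
    if p <= reject_at:
        return "reject", f"model p={p:.2f}"
    return "human review", f"model unsure p={p:.2f}"


toy_score = lambda case: case["history_score"]  # hypothetical model stand-in
print(decide({"amount": 50_000, "id_verified": True, "history_score": 0.99}, toy_score))
print(decide({"amount": 500, "id_verified": True, "history_score": 0.55}, toy_score))
```

Because the rules run before the model, no score, however confident, can override a hard compliance constraint.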

Meta-reasoning

Today, meta-reasoning mainly means watching how well the system performs, how uncertain it is, and how many resources it uses. Based on that data, the system can switch strategies — like preferring retrieval of past cases over generating new text or asking for more data when uncertainty is high. Treat these switches as clear, instrumentable patterns you can tune and measure.
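Those switches can be written as plain, testable rules. The Python sketch below assumes three measured signals (the model's predicted distribution, the similarity of the best-matching past case, and tokens used so far) and uses predictive entropy as the uncertainty measure; the thresholds, budget, and strategy names are hypothetical values to tune.

```python
import math


def entropy_bits(probs):
    """Shannon entropy of a predicted distribution; higher means more uncertain."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def choose_strategy(probs, best_match_similarity, tokens_used, token_budget=4000,
                    max_entropy=0.5, match_at=0.9):
    """Switch strategy from measured signals (all thresholds here are hypothetical)."""
    if tokens_used > token_budget:
        return "stop and escalate to a person"   # resource monitor
    if best_match_similarity >= match_at:
        return "retrieve a past case"            # a close precedent exists
    if entropy_bits(probs) > max_entropy:
        return "ask for more data"               # too uncertain to answer
    return "generate a new answer"


print(choose_strategy([0.98, 0.01, 0.01], best_match_similarity=0.3, tokens_used=100))
print(choose_strategy([0.4, 0.3, 0.3], best_match_similarity=0.3, tokens_used=100))
```

Keeping each switch as an explicit branch makes it easy to log which strategy fired and to measure whether a threshold change helps.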

Pragmatic Decision Framework: AI vs. Human vs. Hybrid

When a decision needs to be made, use these five signals to decide how responsibility should be shared, then apply clear guardrails. Combining human and artificial intelligence this way lets organizations tackle complex problems more effectively by drawing on the strengths of each.


- Task ambiguity. If the goals or constraints keep changing, keep a human in the lead and let the AI provide retrieval and suggestions. If the task is well defined and stable, you can start with the AI driving while a human checks its work.

- Risk level & detectability. When errors can harm people, damage reputation, or slip past standard checks, mandate human sign-off and make sure you can trace, explain, and replay decisions. This aligns with the NIST AI RMF and Article 14 of the EU AI Act.

- Data stability & availability. When data is plentiful and stable, you can lean on automation. When data is thin, changing, or noisy, let people decide. Recheck this every so often; data drift is real.

- Explainability & acceptance. If people must see why decisions are made, as in healthcare, lending, or hiring, choose a hybrid setup. Log every decision, use model-agnostic explanation tools, and let users override when needed.

- Regulatory exposure. In regulated fields, structure everything for auditing: data lineage, ongoing monitoring, human checks, and a way to roll back. Keep track of the EU AI Act’s tiered rollout.

- Implementation pattern. Begin with assistive tools, then shift to gated automation only when the AI model consistently outperforms trained humans and stays well calibrated. Only after that, move to monitored automation with active drift checks, predefined response plans, and clear human decision rights.
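The signals above can be encoded as a small, reviewable policy. The Python sketch below is one possible reading of the list, not a definitive rule set: the `Signals` fields, the mode and guardrail labels, and the choice to step back one stage when drift appears (standing in for a "predefined response plan") are all assumptions.

```python
from dataclasses import dataclass

STAGES = ["assistive", "gated automation", "monitored automation"]


@dataclass
class Signals:
    ambiguous: bool          # goals or constraints keep changing
    high_risk: bool          # errors can harm people or slip past standard checks
    stable_data: bool        # data is plentiful and stable
    needs_explanation: bool  # affected people must see why (healthcare, lending, hiring)
    regulated: bool          # e.g. a high-risk use under the EU AI Act


def choose_mode(s: Signals):
    """Map the five signals to who leads, plus the guardrails each signal calls for."""
    guardrails = []
    if s.high_risk:
        guardrails.append("human sign-off; traceable, replayable decisions")
    if s.needs_explanation:
        guardrails.append("per-decision logs; user override")
    if s.regulated:
        guardrails.append("data lineage, monitoring, rollback for audits")
    if s.ambiguous or not s.stable_data:
        mode = "human leads, AI retrieves and suggests"
    elif guardrails:
        mode = "hybrid: AI proposes, human approves"
    else:
        mode = "AI drives, human checks"
    return mode, guardrails


def next_stage(current, beats_trained_humans, well_calibrated, drift_detected):
    """Promote one stage at a time; step back a stage if drift shows up (assumed response plan)."""
    i = STAGES.index(current)
    if drift_detected:
        return STAGES[max(i - 1, 0)]
    if beats_trained_humans and well_calibrated:
        return STAGES[min(i + 1, len(STAGES) - 1)]
    return current


print(choose_mode(Signals(False, True, True, False, False)))
print(next_stage("assistive", beats_trained_humans=True, well_calibrated=True, drift_detected=False))
```

Writing the policy down as code makes it auditable: a reviewer can see exactly which signal pushed a task toward human or AI control.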

Human capabilities such as creativity, emotional intelligence, and critical thinking remain essential to decision-making, especially in complex or ambiguous situations. Integrating human and artificial intelligence opens far more room for innovation and effective problem-solving than either offers alone.

FAQ

Can AI Beat Human Intelligence?

AI can outperform humans in specific tasks like data analysis, pattern recognition, and speed. However, it does not match human intelligence in creativity, emotional understanding, ethics, and general reasoning.

Which 3 Jobs Will Survive AI?

Jobs that rely on creativity, emotional intelligence, and complex human interaction are most likely to survive AI. Examples include healthcare professionals, creative roles (writers, designers), and leadership positions.

Is It Possible For AI To Destroy Humans?

No. Current AI systems act based on human programming and data and have no goals of their own. The risk comes from misuse or lack of regulation, not from AI having independent destructive intent.

What Is The IQ Level Of AI?

AI does not have a traditional IQ because it lacks human-like intelligence. Some studies equate narrow AI performance to IQ ranges, but AI is better measured by task-specific benchmarks, not general intelligence.

How Long Until AI Takes Over?

AI is not expected to "take over" in the sense of replacing humans entirely. Instead, it will likely keep augmenting jobs and automating repetitive tasks. Many experts expect widespread AI integration across industries within the next decade, with human oversight remaining essential.


Conclusion

Ultimately, AI and human intelligence aren’t in competition; they are different instruments in the same toolkit. The goal is to design them to work together: let algorithms manage large-scale, uniform tasks and leave the weighing of values and accountable decisions to people. Think of governance as engineering: set up verification, calibration, ongoing monitoring, and a straightforward human override when needed.

Roll out automation in graduated steps. Begin with tools that assist; once they are stable, introduce gated automation, where human checks remain. Move to monitored automation only when evidence shows the system consistently outperforms human performance.

The final decision must stay with a person whenever the consequences are severe. Teams that follow this path ship features faster, learn from failures without catastrophic losses, and produce results worth championing.

About Clay

Clay is a UI/UX design & branding agency in San Francisco. We team up with startups and leading brands to create transformative digital experiences. Clients: Facebook, Slack, Google, Amazon, Credit Karma, Zenefits, etc.

