Ever wonder how Netflix knows what you’ll watch next? Or how your phone knows your face? That’s artificial intelligence and machine learning, including various AI solutions. Artificial intelligence and machine learning are closely connected but not identical, and are often closely related in practice.

Millions use AI every day but don’t know how it works. It feels like a black box — confusing and mysterious in the context of intelligence and machine learning.

This article breaks it down. You’ll learn how AI uses data, algorithms, and machine learning to make smart choices.

What Is Artificial Intelligence?

AI means teaching machines to mimic human intelligence and learn like humans. Machine learning is one part of AI that learns from data and helps to reduce human error. Machine learning artificial intelligence systems are designed to perform specific tasks that typically require human reasoning.

Together, they help automate tasks, make smart decisions, and solve real problems, leading to increased operational efficiency.

What Is Artificial Intelligence by Clay

Machine Learning vs Deep Learning

Machine learning forms AI’s learning engine. Instead of following rigid, pre-programmed rules, machine learning systems discover patterns in data and improve their performance automatically. Think of it as AI and ML's way of getting smarter through experience, where machine learning enables computer systems to identify patterns using ML tools and perform tasks that mimic human reasoning.

Deep learning represents a specialized type of machine learning. Inspired by how the human brain processes information, deep learning uses artificial neural networks to analyze massive datasets and extract complex patterns that simpler methods might miss. Deep learning and other techniques enable computer systems to perform complex tasks such as pattern recognition and data analysis.

Here’s a simple way to understand the relationship with machine learning models:

- AI is the ultimate goal — creating intelligent machines

- Machine learning is the method — how AI systems improve through data analysis

- Algorithms are the tools — step-by-step instructions that guide the learning process

- AI and machine learning enable computer systems to perform tasks and identify patterns in data

AI uses other techniques beyond machine learning and various AI tools to solve a wide range of problems.

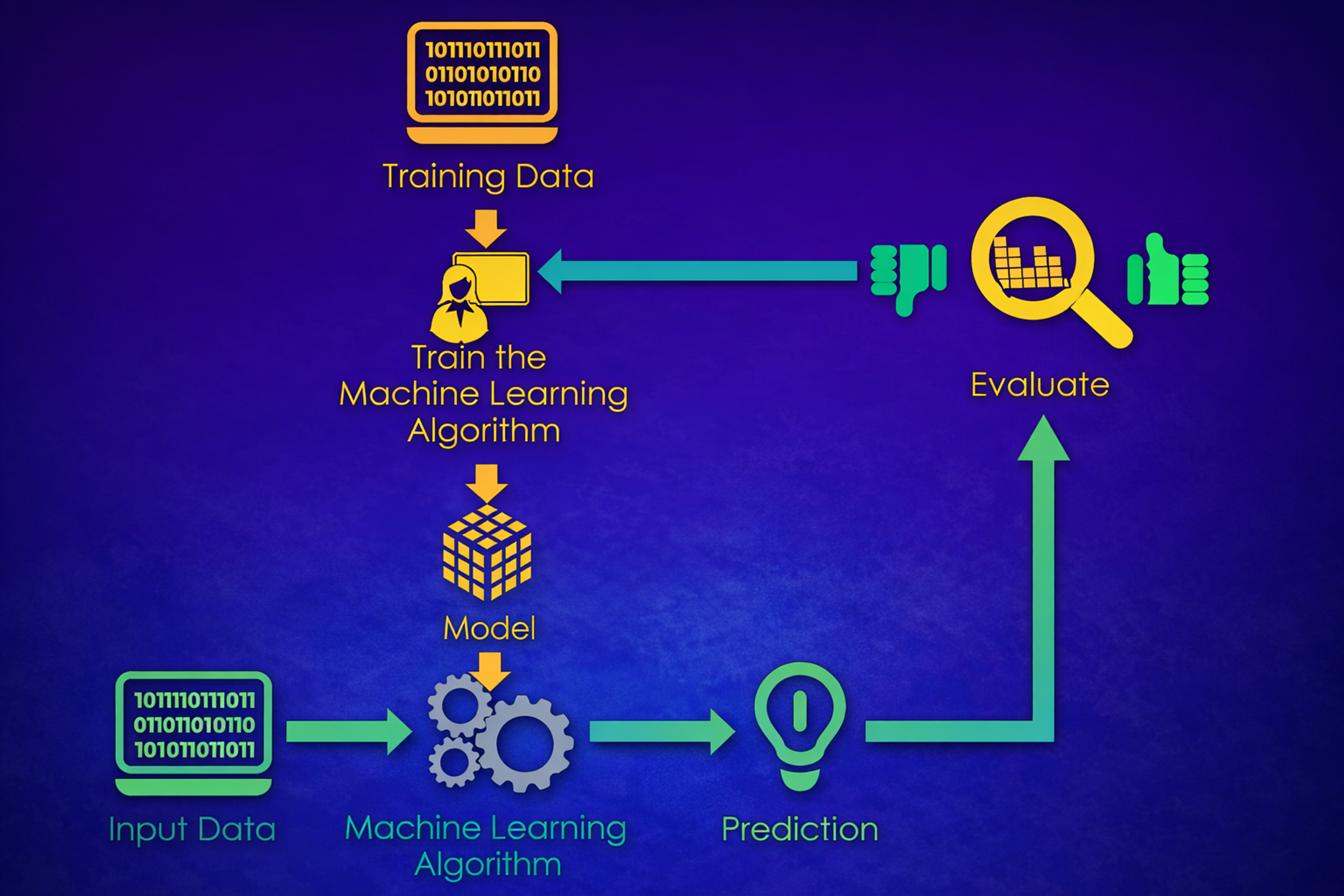

How AI Systems Learn from Data

AI needs three things to work: data input, data processing, algorithms, and training.

Data is the fuel. The more accurate and diverse the data, the better AI learns. Thousands of labeled examples help it spot patterns. Data science plays a crucial role in preparing and analyzing data for AI systems.

Algorithms are the instructions. They tell AI how to study the data and learn from it. Statistical models are often used to analyze data and identify patterns during the learning process. Unlike fixed rules, these instructions change as AI improves.

Training is the learning process. AI looks at examples, makes guesses, gets feedback, and gets better — just like people do.

Sometimes AI memorizes too much (overfitting) or learns too little (underfitting). Tools like TensorFlow and PyTorch help fix these problems and build smarter systems.

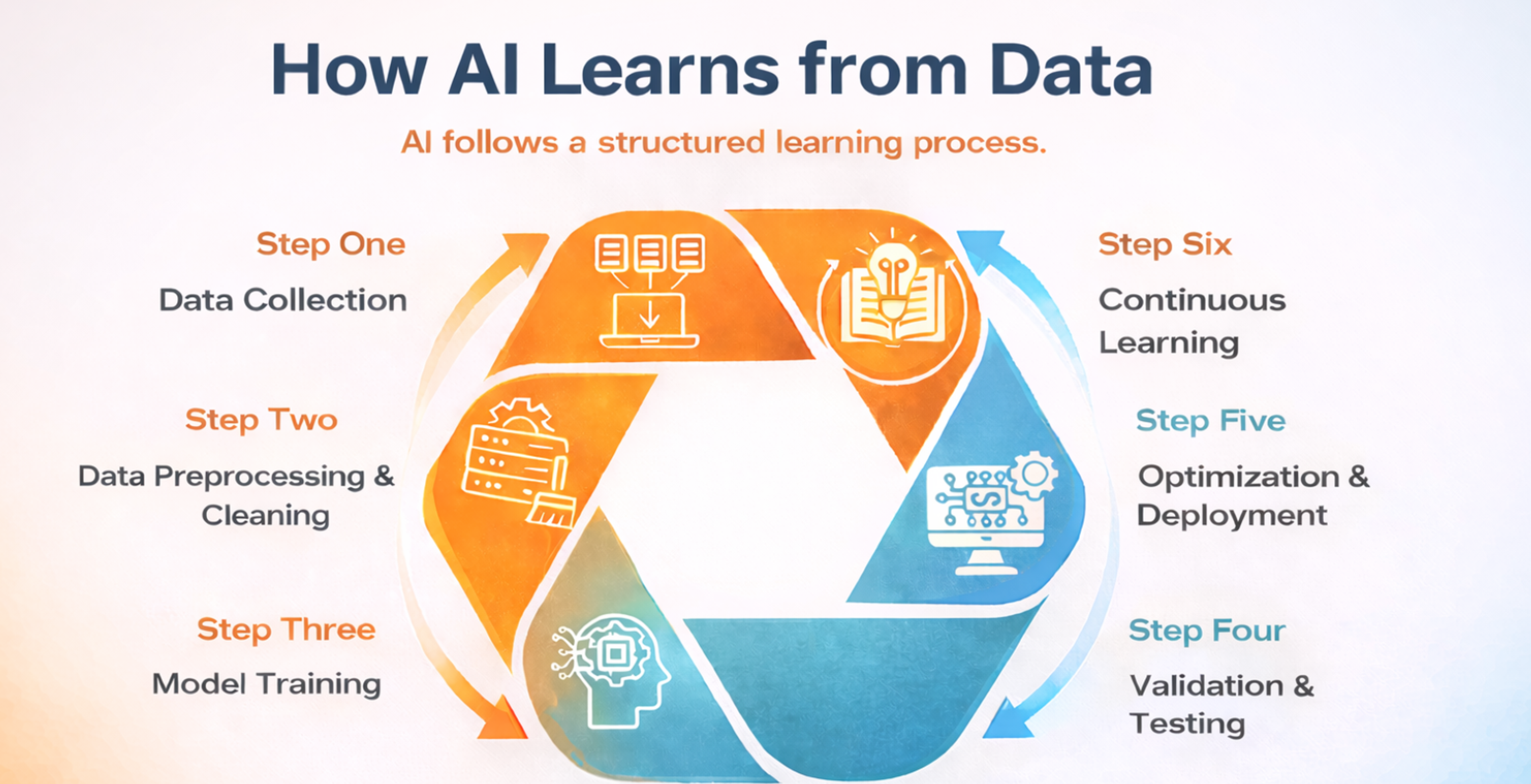

How AI Learns from Data

Getting Started with AI

For individuals and businesses interested in AI adoption, several pathways offer accessible entry points.

- Programming languages commonly used in AI include Python for its extensive libraries, R for statistical analysis, and JavaScript for web-based AI applications. Python's simplicity makes it particularly popular among newcomers.

- AutoML platforms like Google's AutoML and Microsoft's Azure Machine Learning Studio enable non-technical users to build AI models through visual interfaces, reducing the need for deep programming expertise.

- Online education provides structured learning paths. Andrew Ng's Coursera courses and university programs offer comprehensive AI education from beginner to advanced levels.

- Small business adoption often starts with existing AI services — chatbots for customer service, recommendation systems for e-commerce, or automated data analysis for business insights.

The key to successful AI implementation lies in starting small, focusing on specific problems, and gradually building expertise and confidence.

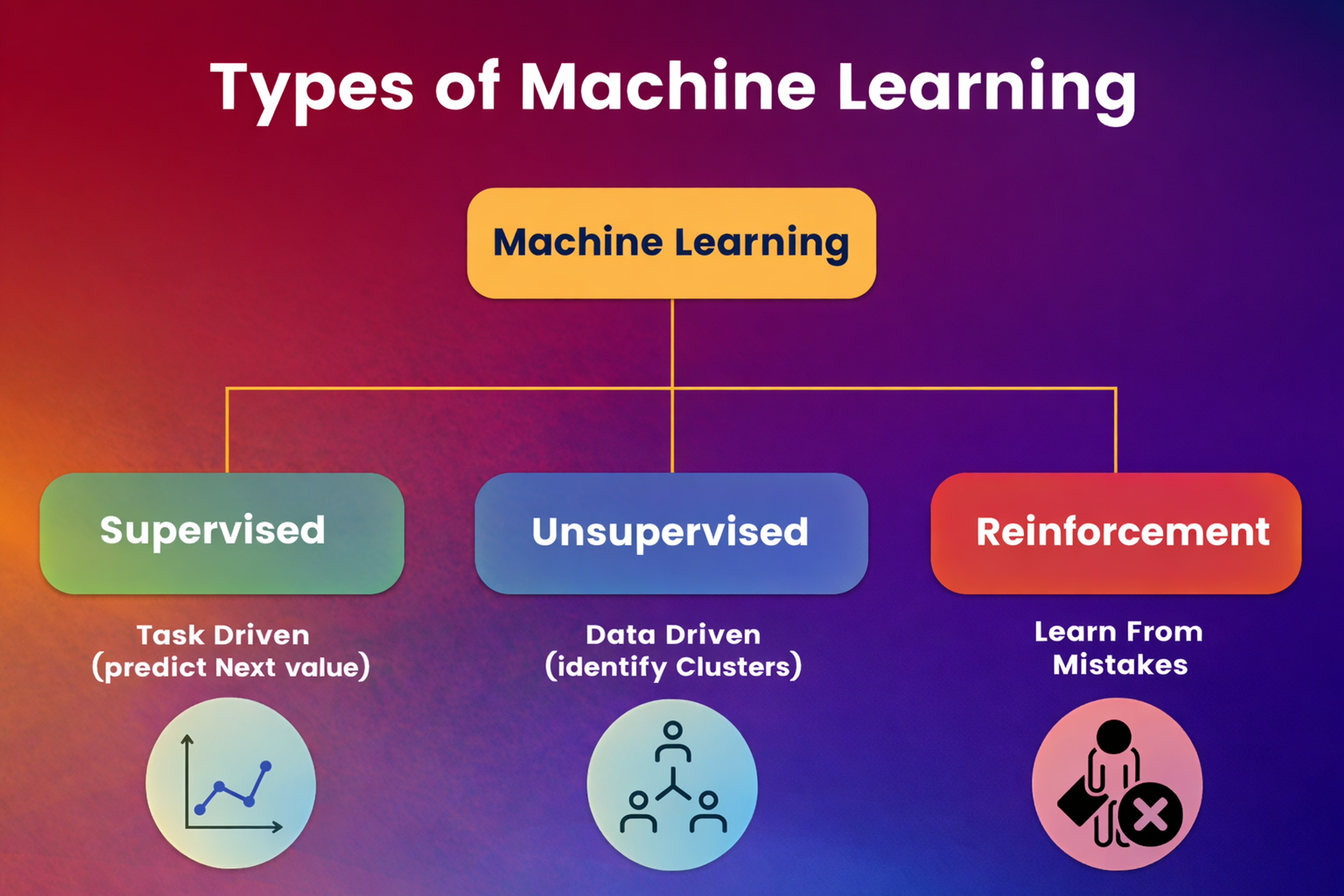

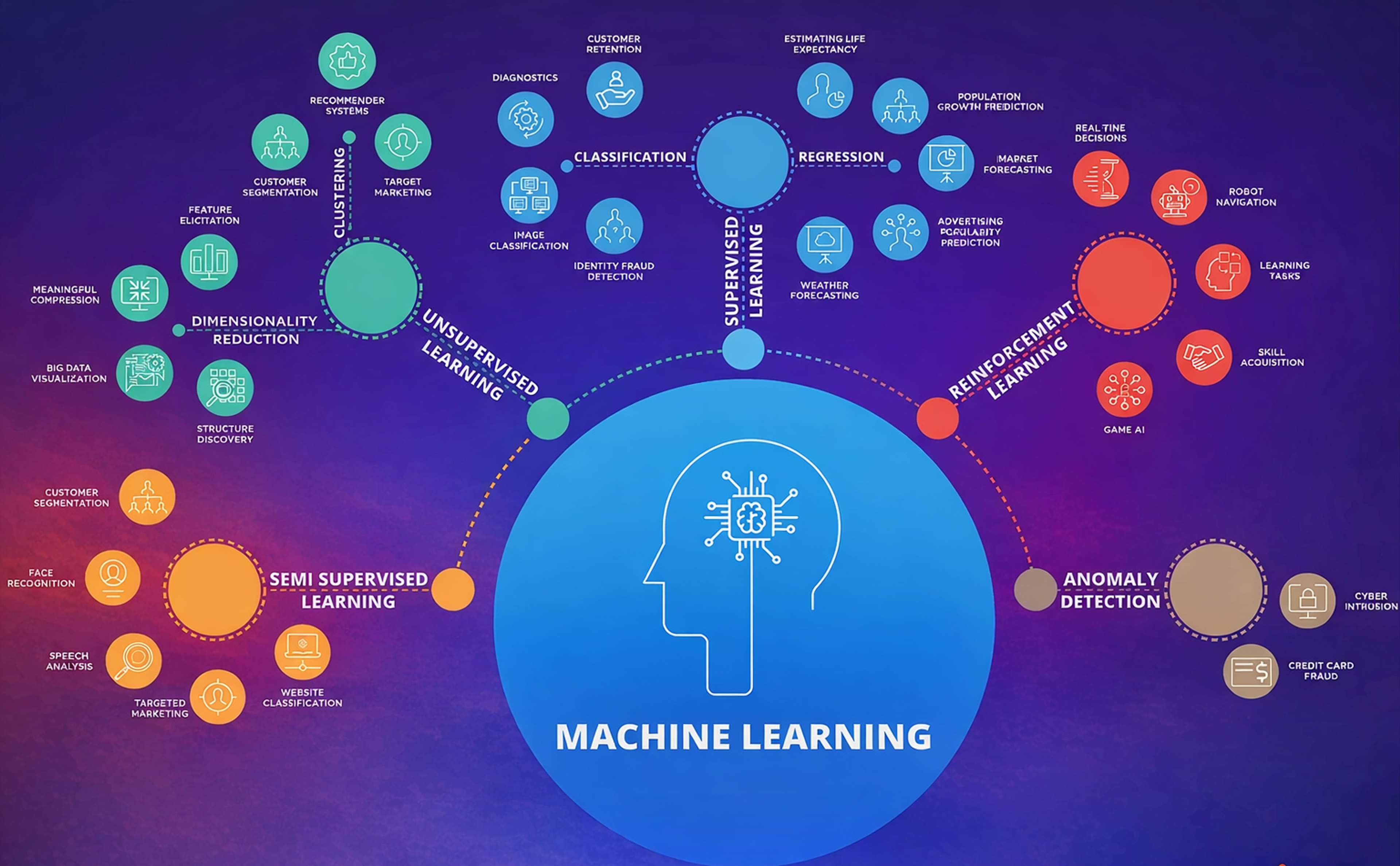

Types of Machine Learning

Machine learning approaches fall into three main categories, each suited for different types of business challenges.

Supervised learning works with labeled data — information that already includes the correct answers. Imagine training an email spam filter. You’d provide thousands of emails already marked as “spam” or “not spam.”

The system studies these examples, learns to identify spam characteristics, and applies this knowledge to new, unlabeled emails. Supervised learning is also widely used in fraud detection, where algorithms identify patterns of suspicious activity to help prevent fraudulent transactions.

Unsupervised learning tackles problems without predetermined answers. The system must discover hidden patterns independently, using pattern recognition to identify patterns in unlabeled data. Netflix’s recommendation engines use this approach to group viewers with similar preferences, even though no one explicitly told it how to categorize people’s tastes.

Reinforcement learning operates through trial and error, much like how children learn to ride bicycles. The system tries different actions, receives rewards for good choices and penalties for poor ones, then adjusts its behavior accordingly. This approach powers game-playing AI like AlphaGo and helps robots learn complex tasks in physical environments.

Types of ML

Machine learning is just one approach to building AI systems; other techniques are also used to solve complex problems.

The Machine Learning Process

Building AI isn't just about choosing the right algorithm or having powerful computers. It's a structured process that turns raw data into smart systems. Companies like Netflix use these pipelines to recommend movies you'll love, while Tesla uses them to power Autopilot systems. Each step matters — skip any of them, and the whole system gets weaker.

Step 1: Data Collection and Preparation

Good AI needs good data. Whether you're building medical diagnosis tools, fraud detection systems, or language translators, your system is only as strong as the data you feed it, in contrast to manual processes.

Raw data comes from many places: server logs, databases, APIs, sensors, or user clicks. But this data is usually messy, incomplete, or inconsistent. Data scientists spend most of their time cleaning it up.

For big data projects, teams often use Apache Spark or Hadoop to process massive datasets. These tools help handle data that's too large for a single computer.

The preparation work includes:

- Data Cleaning: Fixing missing values and removing errors

- Normalization: Scaling features so they're in the same range

- Encoding: Converting text and categories into numbers that the computer can understand

- Tokenization: Breaking sentences into words and turning them into vectors for language models

This phase often includes feature engineering. Teams create new variables from existing data to help models learn better. For example, combining "order date" and "delivery date" creates a "delivery delay" feature that's more useful than either alone.

This work isn't glamorous, but it's the foundation. Models trained on bad data don't just perform poorly — they can produce harmful or misleading results.

Step 2: Algorithm Selection

Different problems need different mathematical tools. Choosing the right algorithm means finding the best match for your problem, data type, and business needs.

For traditional machine learning tasks, scikit-learn offers reliable algorithms like Random Forest and linear regression. For more complex problems involving images or text, deep learning frameworks like TensorFlow and PyTorch provide powerful tools.

Key decisions include:

- Interpretability: Do stakeholders need to understand how the model works? Simpler models like decision trees are easier to explain than complex neural networks.

- Data Complexity: For high-dimensional data like images or natural language, deep learning models like CNNs or Transformers work better.

- Training Resources: Complex models need more computing power and data. For quick experiments or smaller teams, lightweight algorithms like XGBoost might be better starting points.

Teams rarely find the perfect answer on the first try. Most test multiple algorithms in parallel and compare them using metrics like accuracy or error rates.

Machine Learning Algorithms

Step 3: Model Training and Optimization

Training is where machine learning happens. The model learns from data by spotting patterns and adjusting its internal settings to make better predictions.

Here's what happens during training:

1.

The model makes predictions on training data2.

It compares those predictions to correct answers3.

It calculates how wrong it was using a loss function4.

It adjusts its parameters using optimization algorithms like gradient descent

This cycle repeats thousands of times, letting the model improve gradually.

Frameworks like TensorFlow and PyTorch make this process easier by handling the complex math automatically. For tracking experiments, tools like MLflow and Weights & Biases help teams compare different approaches and settings.

Training deep learning models can take hours or days and often needs specialized hardware like GPUs. But even smaller models benefit from careful training techniques:

- Hyperparameter Tuning: Adjusting settings like learning rate and batch size

- Regularization: Techniques like dropout and batch normalization prevent overfitting

- Advanced Architectures: LSTMs for time series data, Transformers for language tasks

Good training creates models that work well on training data but also generalize to new, unseen data.

Step 4: Validation and Testing

Even if a model performs well during training, it might fail with real-world data. That's why validation is essential.

This phase tests the model on data it has never seen. The goal is checking if the model learned real patterns or just memorized the training set.

Common practices include:

- Train/Test Splits: Dividing data into separate parts for training and testing

- Cross-Validation: Rotating different data parts for testing to ensure consistency

- A/B Testing: Comparing model performance against current systems in live environments

Research shows that diverse testing data catches problems early. A voice assistant might work in quiet rooms but fail with background noise or different accents. Without broad testing, these issues go undetected.

Machine Learning Process

Step 5: Deployment and Monitoring

After a model passes validation, teams deploy it into the real world. This usually happens through APIs, cloud platforms like AWS SageMaker or Google Cloud AI Platform, or embedded systems.

Modern deployment often uses Docker containers and Kubernetes for scaling. Companies implement shadow mode deployment and canary releases to minimize risks during rollout.

But deployment isn't the end. Once live, new problems emerge:

- Data Drift: Incoming data changes over time. A model trained on last year's behavior might misinterpret today's trends.

- Model Degradation: Prediction quality drops without regular updates.

- Bias Issues: Models might work well overall, but still fail certain groups.

Tesla's Autopilot system shows how continuous monitoring matters. The company constantly collects new driving data and updates its models to handle new scenarios and edge cases.

Ongoing monitoring includes:

- Tracking performance over time

- Collecting user feedback

- Scheduling retraining with fresh data

- Auditing decisions for GDPR compliance and ethical standards

Successful AI systems are living systems. They must evolve with their environments.

Why This Process Matters

Every real-world AI application relies on this structured pipeline. Healthcare systems diagnosing diseases, financial services detecting fraud, and recommendation engines suggesting products all follow these steps and offer powerful benefits.

Companies that skip steps pay the price. Poor data preparation leads to weak performance. Wrong algorithms limit potential. Skipped validation causes production surprises. Failed monitoring can cause real harm.

Teams that build effective AI systems don't rush early steps. They focus on solid foundations, rigorous testing, and thoughtful deployment.

Real-World Use Cases and Examples

Understanding the machine learning pipeline is important, but its value becomes much clearer when we see how real companies apply it to real challenges. Here are examples from healthcare, retail, finance, and beyond — each of them grounded in actual deployments.

Healthcare

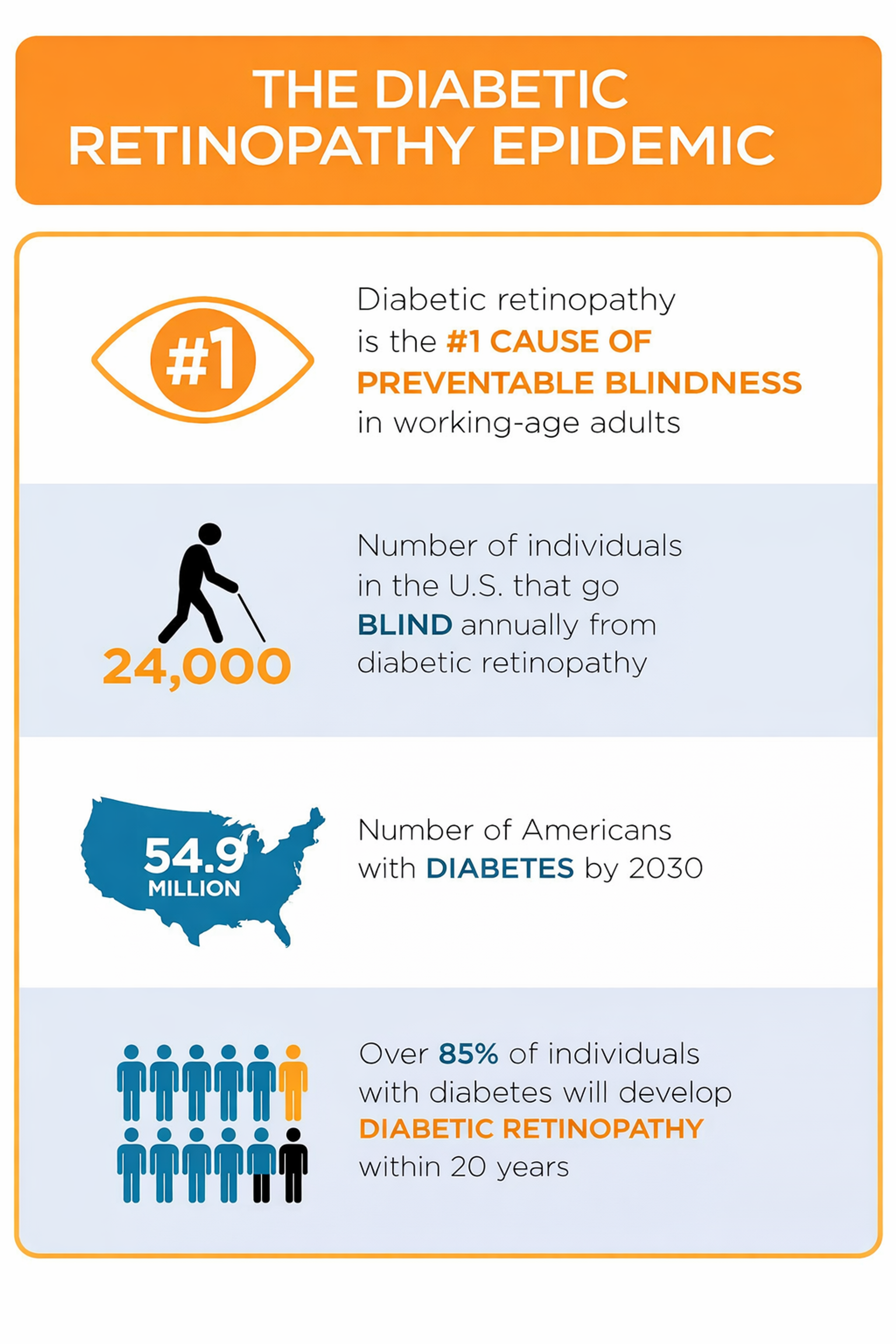

Google Health developed a groundbreaking deep learning system to detect diabetic retinopathy using high-resolution retinal scans collected and labeled by ophthalmologists. These images were preprocessed and standardized before training convolutional neural networks.

Diabetic Retinopathy Screening Primary Care

A prospective study embedded in Thailand’s national screening program found the AI system achieved 94.7% accuracy, 91.4% sensitivity, and 95.4% specificity, matching or exceeding performance of human graders.

Google has licensed this AI model to healthcare partners in India and Thailand, working with institutions like Aravind Eye Hospital and Rajavithi Hospital. The goal is to deliver millions of AI-supported screenings at no cost to patients over the next decade.

Retail

Amazon’s recommendation system is a core driver of its business — estimations suggest that around 35 % of all sales on the platform come through personalized recommendations, both on-site and via email marketing.

The core algorithm — item-to-item collaborative filtering — matches products based on user behavior and is enhanced with hybrid models for more personalized results. These models are deployed across the platform to power homepage suggestions, product carousels, and personalized emails.

Finance

PayPal analyzes millions of transactions daily, training models on extensive historical data — including confirmed fraud cases — to detect subtle anomalies in user behavior. Their fraud detection stack uses gradient‑boosted decision trees and deep learning, enabling up to 10% better real‑time accuracy over traditional methods.

Once deployed, these models assess each transaction in milliseconds, automatically flagging or blocking high‑risk activity while escalating ambiguous cases to human review. PayPal’s AI systems power tools like Fraud Protection Advanced, which delivers dynamic risk scores and real‑time recommendations to merchants.

Language and Communication

DeepL Translator, developed by Germany-based DeepL SE, employs proprietary modifications of the Transformer architecture, trained on millions of aligned bilingual texts sourced from official documents, websites, and their Linguee database. These translation pairs are carefully cleaned and aligned to build high-quality multilingual corpora for model training.

Source: d3.harvard.edu

Logistics

UPS developed ORION (On-Road Integrated Optimization and Navigation) to improve route planning using machine learning. The system processes large volumes of delivery, traffic, and geospatial data to dynamically suggest the most efficient delivery routes for drivers. It’s built on decades of operational insights and is integrated directly into UPS’s daily logistics workflow.

ORION has become one of the most impactful AI deployments in transportation, helping UPS streamline delivery operations at scale.

All of these systems — whether they diagnose illness, recommend products, stop fraud, translate languages, or optimize routes — are built using the same core pipeline:

- Clean, relevant data

- Carefully chosen algorithms

- Rigorous training and validation

- Real-world deployment

- Continuous monitoring and improvement

These aren't just success stories — they're proof that machine learning, when done thoughtfully, opens up new possibilities and drives tangible, scalable impact across industries.

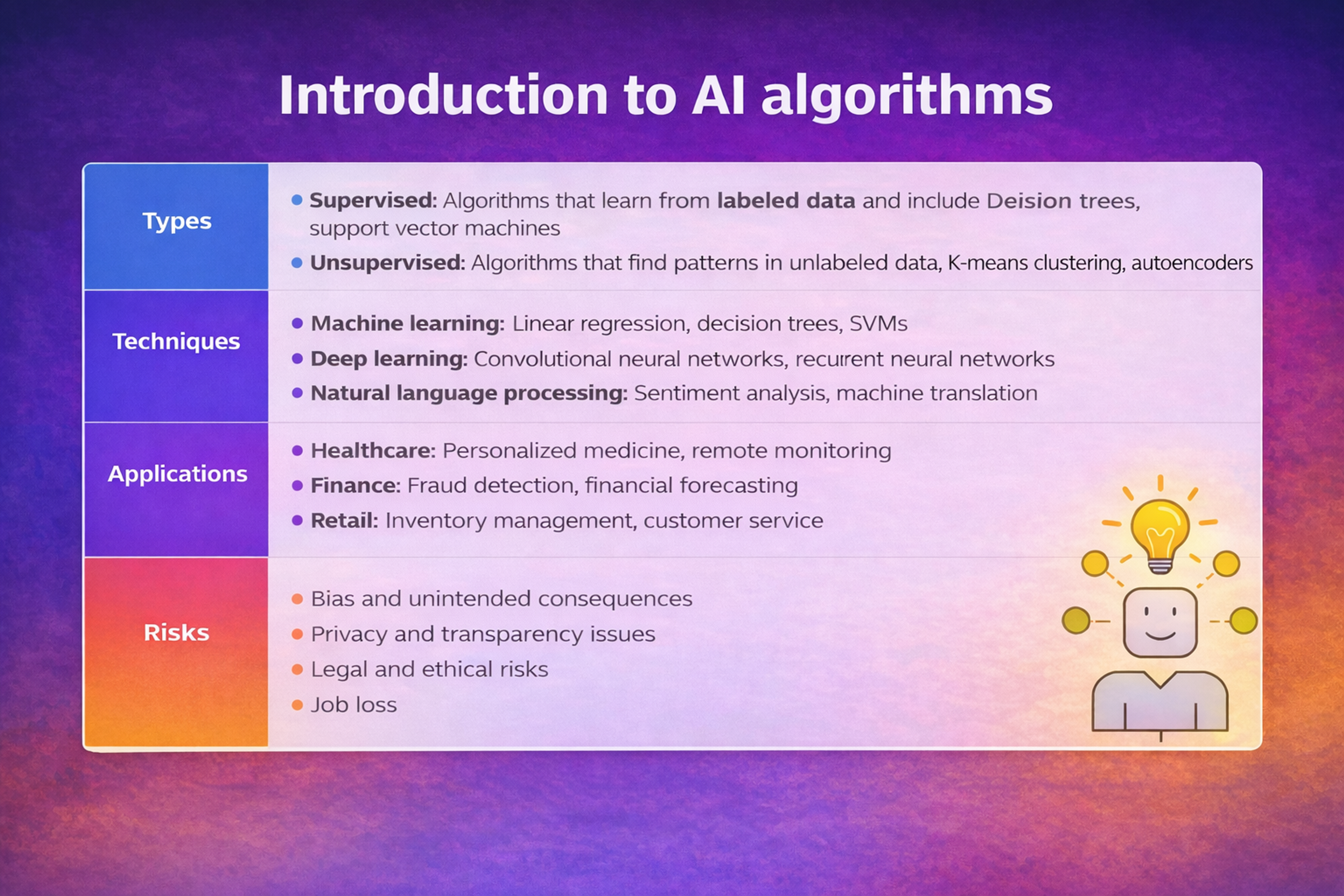

Understanding AI Algorithms

Computer science provides the theoretical and practical foundation for developing AI algorithms, enabling the creation of intelligent systems that can analyze, learn, and make decisions.

Algorithms form the mathematical foundation that makes AI possible. Let’s explore the key types powering today’s intelligent systems.

- Linear regression algorithms predict numerical values based on input features. Real estate websites use these to estimate house prices based on location, size, and amenities. The algorithm finds mathematical relationships between these factors and historical sale prices.

- Decision tree algorithms create branching logic structures that mirror human decision-making. A bank might use decision trees to approve loans, considering factors like credit score, income, and employment history in a systematic way.

- Neural network algorithms simulate simplified versions of biological brain networks. A computer system integrates these algorithms to perform advanced tasks such as sentiment analysis, speech recognition, and natural language understanding, mimicking human cognitive functions. These interconnected processing nodes can recognize complex patterns in images, understand speech, and even generate human-like text.

- Support Vector Machines excel at classification tasks by finding optimal boundaries between different categories. Email providers use these algorithms to separate legitimate messages from spam with high accuracy.

AI Algorithms

The distinction between algorithms and models matters: algorithms are the methods, while models are the trained systems that emerge from applying algorithms to data. You can reuse algorithms across different projects, but each model reflects the specific data it learned from.

Read more:

FAQ

How Much Data Do I Need for Machine Learning?

It depends on the problem. Simple tasks might need hundreds of examples, while complex deep learning models often need thousands or millions.

What Programming Languages Are Best for Machine Learning?

Python is most popular, with libraries like TensorFlow. R is strong for statistics, while JavaScript works for web applications.

How Long Does It Take to Train a Machine Learning Model?

Training time varies widely. Simple models train in minutes, while large deep learning models might take days or weeks.

What Are the Most Common Machine Learning Mistakes?

Poor data quality, wrong algorithm choice, inadequate testing, and lack of monitoring are the biggest pitfalls.

Conclusion

AI isn’t magic — it’s data, math, and smart design. Machine learning finds patterns. Algorithms guide the steps. People keep it safe.

Now when your phone or favorite app uses AI, you’ll know what’s behind it.

This is just the start — and you’re ready to keep up.

About Clay

Clay is a UI/UX design & branding agency in San Francisco. We team up with startups and leading brands to create transformative digital experience. Clients: Facebook, Slack, Google, Amazon, Credit Karma, Zenefits, etc.

Learn more

About Clay

Clay is a UI/UX design & branding agency in San Francisco. We team up with startups and leading brands to create transformative digital experience. Clients: Facebook, Slack, Google, Amazon, Credit Karma, Zenefits, etc.

Learn more