AI writes code, diagnoses disease, and shapes what you see online. It also misreads voices, bakes in old biases, and makes up facts. When tech moves faster than the rules meant to guide it, people get hurt, and trust breaks down. AI ethics asks a simple question: how do we build these systems without causing avoidable harm?

The key issues are bias, transparency, accountability, privacy, and safety. Each one needs practical fixes rather than abstract principles. Bias demands diverse data and clear metrics. Transparency requires model cards and plain explanations.

This article shows what those fixes look like in practice. Big tech companies and business leaders set the tone for ethical AI practice, and they are responsible for compliance with these standards.

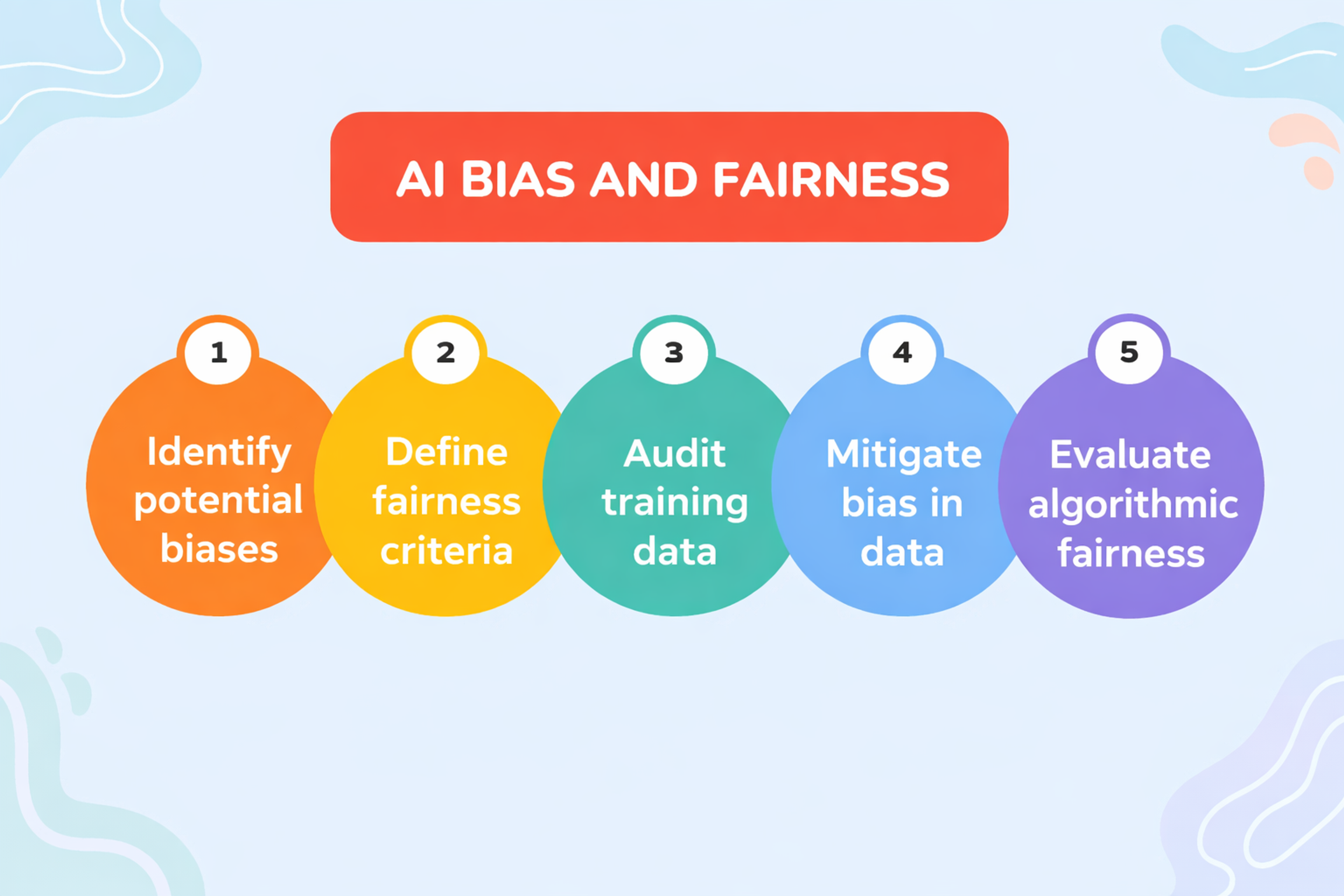

Bias and Fairness

Where Bias Enters

Bias sneaks in through historical data that reflects past inequalities. It comes from rushed labeling by small, similar teams. It hides in features that correlate with race or gender. It emerges when deployment conditions drift from training data.

Even a neutral model can produce unfair results if the process around it is biased. Screening résumés in an industry where one group historically lacked access to credentials? The model amplifies the gap.

Why This Matters

Unfair AI decisions block access to real opportunities such as loans, jobs, housing, and medical care. Minor errors become structural harm at scale. Reputation damage comes fast. Legal consequences follow more slowly but hit harder.

What Actually Helps

Start with diverse, well-documented datasets. Write data statements that describe where the data came from, who it covers, and what's missing.

Test with metrics broken down by group. Check error rates, false positives, false negatives, and calibration across the populations you serve.
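As a minimal sketch of what a group-level check can look like, the snippet below computes false positive and false negative rates per group from labeled predictions. The function name `rates_by_group`, the record layout, and the toy data are assumptions made for illustration, not part of any specific toolkit.

```python
from collections import defaultdict

def rates_by_group(records):
    """Return false positive and false negative rates for each group.

    Each record is a (group, y_true, y_pred) tuple with 0/1 labels.
    A rate is None when the group has no examples of that class.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 1:
            c["pos"] += 1
            if y_pred == 0:
                c["fn"] += 1
        else:
            c["neg"] += 1
            if y_pred == 1:
                c["fp"] += 1
    return {
        group: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }

# Hypothetical toy data: (group, true label, predicted label)
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 1),
    ("B", 1, 0), ("B", 1, 1), ("B", 0, 0), ("B", 0, 0),
]
report = rates_by_group(records)
for group, rates in sorted(report.items()):
    print(group, rates)
```

In this toy data, group A has a false positive rate of 0.5 and no false negatives, while group B shows the reverse. An aggregate error rate would hide that difference, which is why the breakdown matters.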

Match your fix to the problem. Reweight data when labels are suspect. Adjust decision thresholds when deployment shifts outcomes. Retrain when distributions change.
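One possible way to reweight, sketched here under assumptions, is the scheme often called reweighing: each (group, label) combination gets the weight it would need for group and label to look statistically independent in the training set. The function name and toy samples are hypothetical, and this is one option among several rather than a fix for every case.

```python
from collections import Counter

def reweighing_weights(samples):
    """Map each (group, label) pair to a training weight.

    weight = P(group) * P(label) / P(group, label), estimated from counts.
    Pairs that are rarer than independence would predict get weights above 1.
    """
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    pair_counts = Counter(samples)
    return {
        (group, label): group_counts[group] * label_counts[label] / (n * count)
        for (group, label), count in pair_counts.items()
    }

# Hypothetical data: group A is mostly labeled 1, group B mostly labeled 0
samples = [("A", 1)] * 3 + [("A", 0)] + [("B", 1)] + [("B", 0)] * 3
weights = reweighing_weights(samples)
for pair, weight in sorted(weights.items()):
    print(pair, round(weight, 3))
```

The underrepresented pairs, ("A", 0) and ("B", 1), receive a weight of 2.0, and the overrepresented pairs drop to about 0.667. Most training libraries accept such values as per-sample weights, but check that against the library you use.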

Bring affected people into testing and feedback loops early.

Fairness has no universal metric. When the stakes are high, the ethical move is to name and defend your trade-offs.

Transparency and Explainability

Why Systems Stay Opaque

Modern models are complex, and many are proprietary. Both make explanations difficult. Explanations matter most when decisions affect lives, especially in credit, hiring, healthcare, and legal outcomes.

What Transparency Can Mean

Model cards that summarize intended use, training sources, performance across groups, known limits, and unsafe contexts.

Data documentation that records consent, sources, and what you excluded.

Explanation interfaces that show what drove a prediction or offer alternative paths to the same outcome.
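A model card can start as a small structured record kept next to the model. The sketch below is an assumption about how one might look: the `ModelCard` class, its field names (taken from the list above), and the example values are all hypothetical rather than a standard schema.

```python
from dataclasses import dataclass, field, asdict
import json

@dataclass
class ModelCard:
    name: str
    intended_use: str
    training_sources: list
    performance_by_group: dict
    known_limits: list = field(default_factory=list)
    unsafe_contexts: list = field(default_factory=list)

    def missing_sections(self):
        """List fields that are still empty, so gaps are visible before release."""
        return [key for key, value in asdict(self).items() if not value]

# Hypothetical example values
card = ModelCard(
    name="resume-screener-demo",
    intended_use="Rank applications for human review; not for automatic rejection.",
    training_sources=["Hypothetical internal applications dataset"],
    performance_by_group={"group_a": {"fnr": 0.08}, "group_b": {"fnr": 0.15}},
    known_limits=["Not evaluated on non-English applications"],
)

card_json = json.dumps(asdict(card), indent=2)
print(card_json)
print("Still missing:", card.missing_sections())
```

Here the card reports `unsafe_contexts` as still missing. Treating an empty section as a release blocker is a design choice, but it keeps the card from becoming a formality.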

Explainability isn't just a technical problem. It's about giving people enough context to contest or opt out. If your explanation doesn't help someone act, it's just theater.

Accountability and Responsibility

“The model did it” isn’t an answer when harm happens. Responsibility spans the whole lifecycle. Technology companies, from startups to tech giants, carry much of that responsibility, given their influence and reach in developing and deploying these systems.

Design: What problem are you solving? For whom?

Data: Who collected it? With what consent? What’s missing?

Development: Who approved the release criteria? What guardrails exist?

Deployment: Who monitors drift? Who handles incidents?

Remediation: Who fixes harm? Who compensates affected users?

Many technology companies and the broader private sector have developed their own accountability frameworks, sometimes in collaboration with government officials and business leaders, to address responsibility across the AI lifecycle.

Clear ownership, decision logs, and incident plans turn ethics from aspiration into operation. You don’t have accountability if you can’t name the owner at each stage.
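The "name the owner at each stage" test can be checked mechanically. This is a minimal sketch, assuming a hypothetical owner registry keyed by the five lifecycle stages listed in this section; the role names are placeholders.

```python
LIFECYCLE_STAGES = ["design", "data", "development", "deployment", "remediation"]

def unowned_stages(owners):
    """Return lifecycle stages that have no named owner."""
    return [stage for stage in LIFECYCLE_STAGES if not owners.get(stage, "").strip()]

# Hypothetical registry: deployment is blank and remediation is absent
owners = {
    "design": "Product lead",
    "data": "Data steward",
    "development": "ML lead",
    "deployment": "",
}

gaps = unowned_stages(owners)
if gaps:
    print("No accountable owner for:", ", ".join(gaps))
```

Running a check like this as part of a release review is one way to make the gaps visible before launch rather than after an incident.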

Privacy and Surveillance

AI needs data. People need privacy. The tension is real. Data protection and data security are essential components of privacy in AI, ensuring that sensitive information is safeguarded against unauthorized access and cyber threats.

Collection and Secondary Use: Data gathered for one purpose often trains models for another. Ethical practice requires explicit consent for training, data minimization, and strict retention rules.

Re-identification and Leakage: “Anonymous” data can sometimes be re-identified. Generative models can leak sensitive snippets from training sets.

Surveillance Creep: Low-cost sensors and AI inference models make it tempting to monitor people in workplaces, schools, and public spaces. Protecting civil liberties is crucial as AI surveillance expands, to ensure that individual freedoms and human rights are not compromised.

What Reduces Risk: Differential privacy, federated learning, and secure aggregation are privacy-enhancing technologies that help. The European Union's GDPR is a leading example of data protection regulation, setting global standards for privacy and compliance. Strong access controls and human-centered consent also help.
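To make differential privacy less abstract, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names, the `epsilon` value, and the sample ages are assumptions for illustration. Production systems should rely on a vetted library, since naive floating-point noise can weaken the guarantee.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that match the predicate.

    A counting query has sensitivity 1: adding or removing one person
    changes the true count by at most 1, so the noise scale is 1 / epsilon.
    """
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical data: ages of seven users
ages = [23, 35, 41, 52, 29, 67, 38]
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0)
print(f"Noisy count of users aged 40+: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives more accurate answers. Choosing it is a policy decision as much as a technical one.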

Would a reasonable user be surprised that their data powered this system? If yes, fix the practice or change the consent.

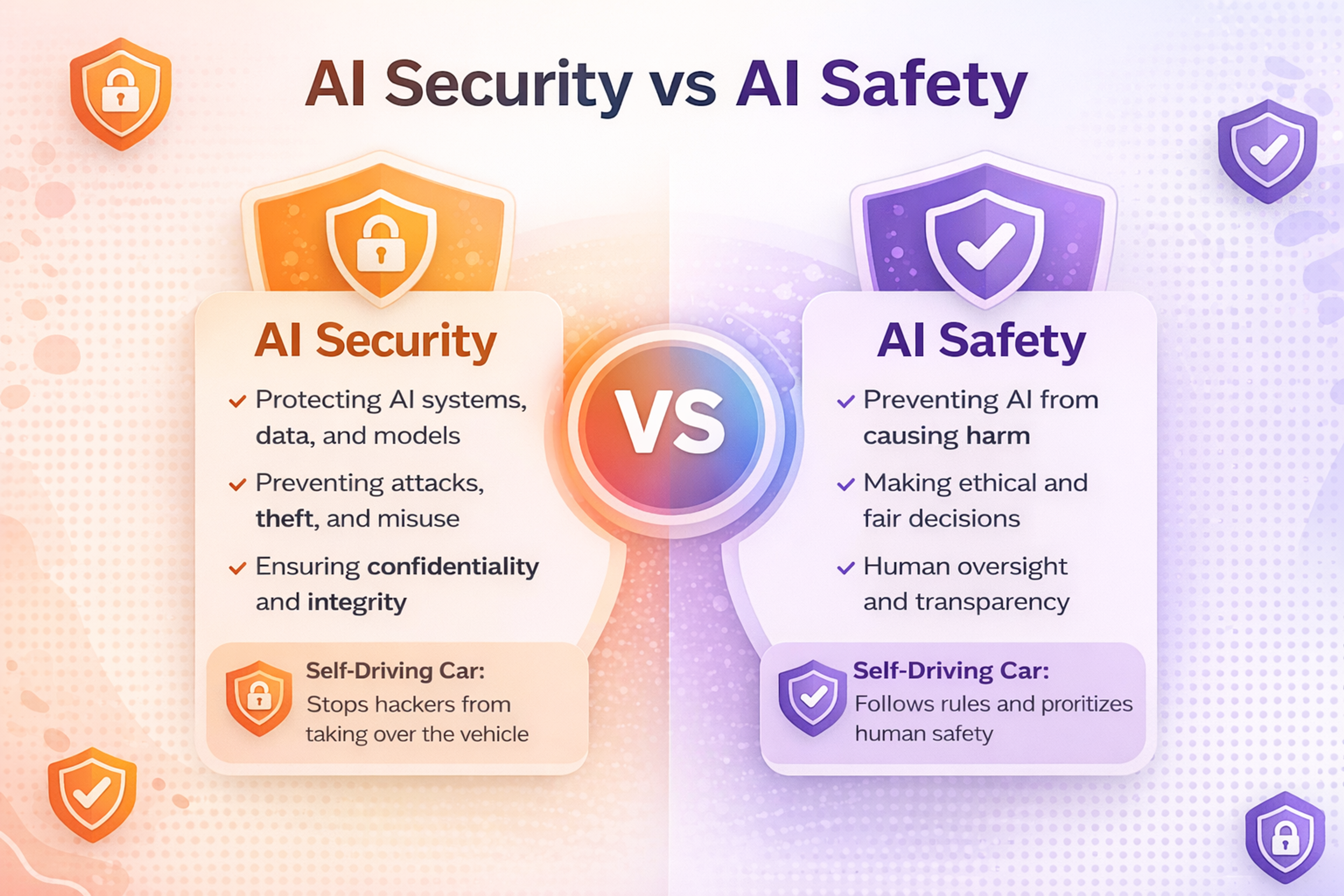

Safety, Security, and Misuse

Ethical AI must be both safe and secure. Identifying and mitigating AI risks is essential to ethical, reliable, and trustworthy deployment.

Safety is reliability. Does the system behave as expected under stress? For generative models, safety also means reducing harmful outputs, blocking risky instructions, and minimizing hallucinations in high-stakes contexts.

Security is resilience against adversaries. Data poisoning, prompt injection, jailbreaks, model inversion, and theft all pose threats.

Practical steps include pre-deployment red teaming with diverse testers, robust evaluation suites, layered guardrails, and defense-in-depth beyond the model. Implementing responsible AI practices and ethical AI solutions can help address these challenges by promoting transparency, accountability, and risk management throughout the AI lifecycle.

Treat misuse as a design scenario from day one.

Human Autonomy and Control

AI should support human judgment, not replace it, especially when rights or safety are at risk. It is crucial that AI systems are designed to respect human rights and protect human life, ensuring that their deployment does not lead to harm or the violation of fundamental freedoms.

The Risk: Automation bias. People over-trust machine outputs when interfaces look authoritative.

What Preserves Control:

- Human-in-the-loop: ensure people can review, override, or decline model decisions when outcomes matter, particularly to safeguard human life and uphold human rights.

- Opt-outs and appeals: give users real ways to contest decisions and request human review.

- Ethical acceptability: ensure that AI decisions are ethically acceptable, especially in high-stakes contexts where human life or rights may be affected.

Design interfaces that encourage skepticism, show uncertainty, reveal the strongest evidence, and make it easy to request more context.
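A human-in-the-loop rule can be as simple as a routing gate in front of the model's output. The sketch below assumes a hypothetical `route` function and an arbitrary confidence threshold of 0.9. The right threshold, and which outcomes count as high-stakes, depend on the domain.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    outcome: str       # "approve", "deny", or "needs_human_review"
    confidence: float  # model confidence, shown to the reviewer
    decided_by: str    # "model" or "human_queue"

def route(prediction, confidence, high_stakes, threshold=0.9):
    """Send adverse high-stakes or low-confidence predictions to a person."""
    if high_stakes and prediction == "deny":
        # Adverse decisions that affect rights or safety always get human review
        return Decision("needs_human_review", confidence, "human_queue")
    if confidence < threshold:
        # The model is unsure, so a person decides
        return Decision("needs_human_review", confidence, "human_queue")
    return Decision(prediction, confidence, "model")

print(route("approve", 0.95, high_stakes=False))
print(route("deny", 0.99, high_stakes=True))
print(route("approve", 0.60, high_stakes=False))
```

Only the first call is decided by the model. Keeping the confidence value on the `Decision` record lets the review interface show uncertainty instead of hiding it.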

Work, Labor, and Economic Displacement

AI reshapes work. Some tasks disappear, others grow, and new ones emerge. The ethical question is who pays for the transition, not only how many jobs are lost.

Hidden Costs: Annotation, content moderation, and data cleaning often fall on low-paid workers who face psychological risks with poor protections.

Uneven Impact: Displacement hits regions and communities unevenly.

Dignity at Stake: AI-powered monitoring erodes trust and autonomy at work. Jobs that require human interaction, including empathy, judgment, and personal engagement, remain valuable and are less likely to be replaced by AI. Leaders should consider this when managing workforce transitions.

An Ethical Response: Run labor impact assessments before rollout. Disclose data-work supply chains. Pay moderators fairly and support their mental health. Fund reskilling pathways early.

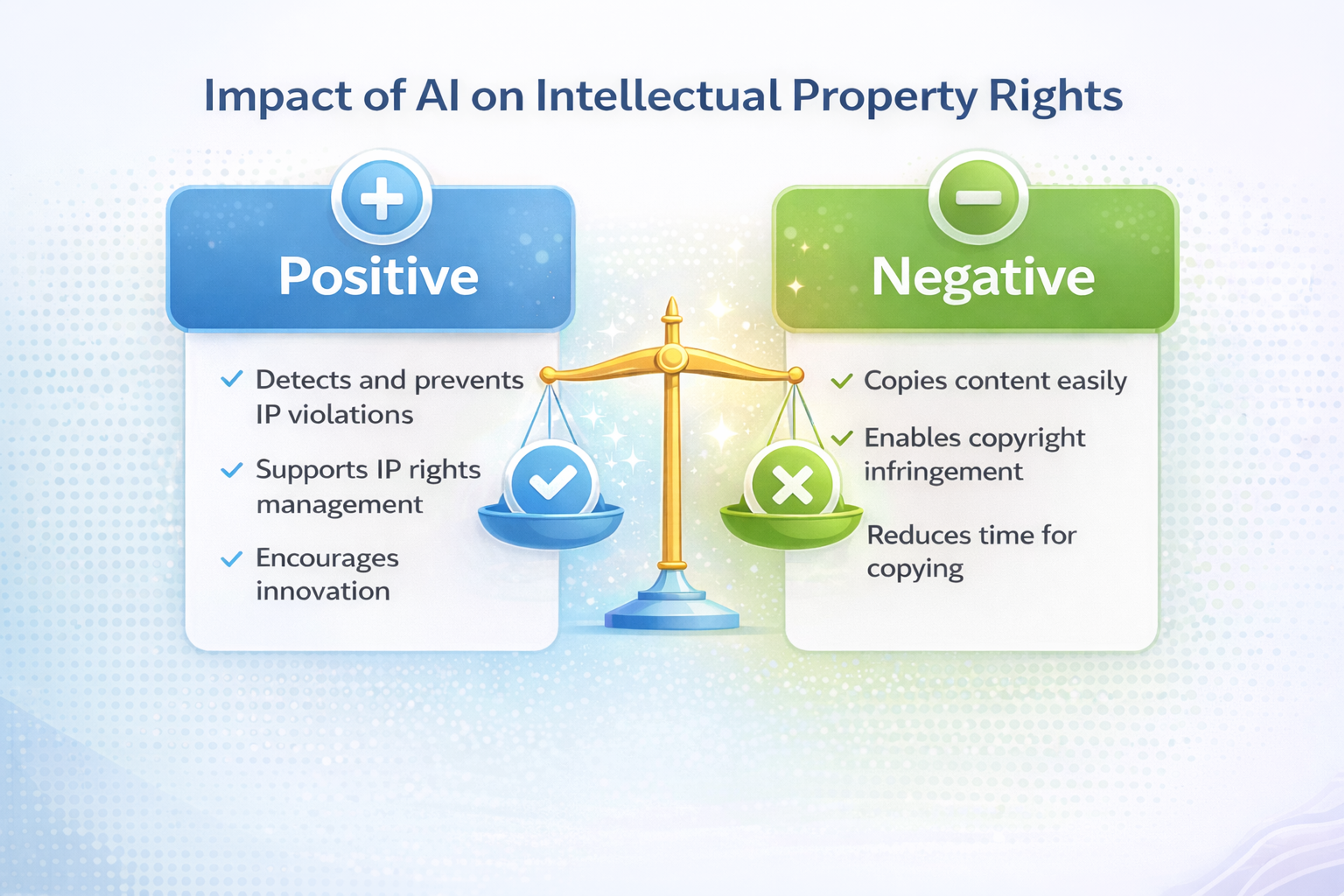

Intellectual Property and Creative Rights

Two tensions define this moment: training on copyrighted or personal material, and generating content that imitates living creators. Increasingly, AI models and tools are used to generate content, raising new ethical and legal questions about ownership, attribution, and the rights of original creators.

Training Data: Scraped content may include copyrighted works or private material. When consent is unclear, legitimacy is unclear.

Style and Substitution: Models can imitate distinctive styles. This raises questions about compensation and market harm.

Provenance and Authenticity: As synthetic media floods feeds, creators and audiences need reliable ways to verify origin. AI technologies now play a dual role, both in creating synthetic media and in developing advanced detection methods to authenticate content and identify manipulation.

Ethical Practice: Prefer consent-based datasets. Build opt-out registries that actually work. License data transparently where possible. Use content provenance, including watermarking and cryptographic signatures, to track model outputs. Responsible development and deployment of each AI model is essential to address ethical, societal, and environmental concerns.
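To illustrate the signing idea, the sketch below attaches a keyed hash to each model output so that later edits can be detected. It uses HMAC from Python's standard library as a simplified, symmetric stand-in; real provenance schemes typically rely on public-key signatures so anyone can verify without holding the secret. The function names, model ID, and key are hypothetical.

```python
import hashlib
import hmac
import json

def _payload(content, model_id):
    """Build the bytes that get signed: model ID plus a hash of the content."""
    record = {
        "model_id": model_id,
        "sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
    }
    return record, json.dumps(record, sort_keys=True).encode("utf-8")

def sign_output(content, model_id, key):
    """Return a provenance record for a piece of generated content."""
    record, payload = _payload(content, model_id)
    record["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record

def verify_output(content, record, key):
    """Check that the content still matches its provenance record."""
    _, payload = _payload(content, record["model_id"])
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["signature"])

key = b"demo-key-do-not-use-in-production"  # hypothetical secret
text = "A generated product description."
record = sign_output(text, "demo-model-v1", key)

print(verify_output(text, record, key))
print(verify_output(text + " Edited.", record, key))
```

The first check passes and the second fails, because any change to the content changes its hash. This only proves origin to parties who hold the key, which is the main limit of the symmetric shortcut used here.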

Misinformation and Societal Trust

Generative AI scales the production of persuasive lies. The risks are evident in elections, markets, and public health. Following AI news is crucial to stay updated on emerging risks, trends, and developments in AI-driven misinformation.

Detection alone will never be perfect. Resilience must also rise: friction for mass-generated content, provenance signals on platforms, and rapid response when harmful narratives spread.

Teams building generative systems should stress-test for misuse, restrict high-risk capabilities, and coordinate with platforms and civil society on response plans.

If your model can mint convincing fake evidence, you must throttle and trace it. To build societal resilience against misinformation, it is essential to promote AI ethics and encourage responsible AI development.

Environmental Impact

Training and serving large models consume significant energy and water. Machine learning and large AI models, in particular, contribute to increased energy and resource consumption due to their need for processing vast datasets and complex computations. Hardware refresh cycles produce e-waste.

Ethics here isn’t guilt. It’s measurement and reduction.

Measure compute, energy source, and water use for training and major inference deployments.

Reduce with efficient architectures, quantization, model reuse, and scheduling workloads to greener grids when feasible.

Design for right-sizing. Most tasks don’t need the largest model.

Include environmental metrics alongside accuracy and latency in decisions. If you don’t measure footprint, you can’t manage it.
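A first-pass footprint estimate needs only a few inputs. The sketch below multiplies hardware power by runtime and a data center overhead factor (PUE, power usage effectiveness), then converts energy to emissions using a grid carbon intensity. Every number in the example is hypothetical, and the function covers neither water use nor hardware manufacturing.

```python
def training_footprint(gpu_count, avg_power_watts, hours, pue, grid_kg_co2_per_kwh):
    """Estimate energy (kWh) and emissions (kg CO2) for a training run.

    energy = GPUs x average power per GPU x hours x PUE
    emissions = energy x grid carbon intensity
    """
    energy_kwh = gpu_count * avg_power_watts / 1000 * hours * pue
    return {
        "energy_kwh": energy_kwh,
        "co2_kg": energy_kwh * grid_kg_co2_per_kwh,
    }

# Hypothetical run: 8 GPUs at 300 W average for 100 hours
estimate = training_footprint(
    gpu_count=8,
    avg_power_watts=300,
    hours=100,
    pue=1.2,
    grid_kg_co2_per_kwh=0.4,
)
print(f"{estimate['energy_kwh']:.1f} kWh, {estimate['co2_kg']:.1f} kg CO2")
```

With these inputs the estimate comes to about 288 kWh and roughly 115 kg of CO2. Rerunning it with a lower grid intensity shows how much scheduling on a greener grid can change the result.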

Global Justice and Inclusion

AI systems centered on English, Western data, and major markets can marginalize the rest of the world. Intelligent systems must be designed for responsible AI use across diverse global contexts, ensuring that ethical principles, fairness, and accountability are prioritized in every stage of development and deployment.

Language and culture: errors climb for underrepresented languages and dialects.

Access: powerful models may be gated behind costs or policies that exclude low-resource communities.

Epistemic equity: local knowledge and values rarely shape datasets and benchmarks.

Ethical AI means participatory design. Work with the communities you aim to serve, pay them for their expertise, and include their languages and norms in data and evaluation.

“For” without “with” is a red flag.

Regulation, Standards, and Compliance

Laws and voluntary frameworks are converging on a risk-based approach. High-risk uses in healthcare, employment, credit, and critical infrastructure face stronger obligations such as documentation, human oversight, robust testing, and post-market monitoring.

Government regulation is most prominent in high-risk domains such as healthcare and autonomous vehicles, where oversight and specialized rules are needed to ensure safety and address societal impacts.

Even when not legally required, aligning with recognized frameworks prepares teams for audits and improves discipline. Emphasizing trustworthy AI, the ethics of AI, and addressing ethical concerns is also essential for meeting compliance requirements and upholding industry standards.

The message is clear: keep records, know your data, justify your use cases, and plan for incidents.

Looking Ahead

Two trends raise the stakes.

First, AI agents with tools and long-term memory will act more autonomously. The growing role of autonomous systems and smart machines in society brings new ethical and practical challenges, especially as these technologies are increasingly integrated into critical decision-making processes. That makes oversight, logging, and sandboxing essential.

Second, dual-use risks grow as models improve at science and engineering tasks. Helpful knowledge can also enable harm. Open publication norms may need new guardrails without choking research. As we look ahead, responsible AI development, ethical AI applications, and the use of AI technology are crucial for ensuring that advancements benefit society while minimizing risks.

The theme is the same in both cases: gradually increase systems’ capability in controlled contexts, with humans ready to intervene.

FAQs

What Are the Four Core Principles of AI Ethics?

The four core principles are fairness (equal treatment across groups), accountability (clear responsibility for outcomes), transparency (understandable systems), and safety (reliable behavior under stress). These principles guide choices about data, models, interfaces, and policies throughout the AI lifecycle.

How Do You Detect Bias in AI Systems?

Detect bias by evaluating systems with group-disaggregated metrics. Check error rates, false positive rates, false negative rates, and calibration across the populations you serve. Run scenario tests that represent actual users. Include affected stakeholders in testing and feedback loops to catch issues that technical metrics miss.

What Makes an AI System Transparent?

Transparency means providing enough context for people to understand how a system affects them. This includes model cards summarizing intended use and limitations, data documentation recording consent and sources, and explanation interfaces showing what drove predictions. Real transparency helps people contest decisions or opt out.

Conclusion

Ethical AI is not a one-time checklist. It is a discipline you build, with habits, structures, and accountability that follow every project from idea to retirement.

The core questions stay the same: Is this fair? Who is responsible? Do people understand and give consent? Is it safe from error and attack? Who gains, and who pays the cost?

Teams that answer with evidence, not feelings, earn trust. Trust is the one input every intelligent system needs to do real good.

About Clay

Clay is a UI/UX design & branding agency in San Francisco. We team up with startups and leading brands to create transformative digital experience. Clients: Facebook, Slack, Google, Amazon, Credit Karma, Zenefits, etc.

Learn more
