In 2026, the debate about AI risks intensified. The technology scaled rapidly, reaching hundreds of millions of users within months, showcasing both remarkable capabilities and new vulnerabilities. The question of whether benefits outweigh risks has no simple answer.

While artificial intelligence (AI) offers potential benefits such as advancements in healthcare, efficiency, and innovation, these must be carefully weighed against the associated risks, including ethical concerns, security vulnerabilities, and societal impact.

Every powerful technology has a dark side, and with AI that side can be hard to see. Between optimism and catastrophe lies a more practical question: how well we understand the risks we are creating and how effectively we manage them.

Potential AI Risks

What We Mean by "Artificial Intelligence" Today

One reason the conversation is confusing is that "AI" is not a single, unified concept.

Most systems we refer to as AI today are considered "narrow" or "weak" AI. Large language models, such as ChatGPT, GPT-4, and Claude, are powerful examples.

They excel at specific tasks, such as writing essays, translating languages, generating code, and answering questions. They find patterns in data and optimize for particular objectives. But they do not understand the world in a broad human sense.

"General" AI, by contrast, refers to systems that can flexibly reason and learn across multiple domains, approaching human-level versatility and the adaptability of human intelligence.

We do not have such systems yet, but companies like OpenAI, Google DeepMind, and Anthropic are pushing in that direction, and modern large models already demonstrate surprising capabilities across a wide range of tasks.

The catch is that the same word covers both a spam filter and a hypothetical system that might one day design new technologies, manipulate markets, or outthink human decision-makers.

This ambiguity makes honest discussion difficult. When one person warns about AI risk and another dismisses it, they may not even be discussing the same thing.

Harms That Are Already Here

We do not need science fiction to see the dark side of AI. It is already visible in systems that quietly shape everyday life. The AI industry and the research community build these technologies, and they also play a central role in identifying and addressing the harms their use can cause.

Bias and Discrimination

AI systems learn from data that reflects existing inequality and bias. When those patterns are reproduced without scrutiny, AI bias is amplified. Studies report higher facial recognition error rates for women and people of color than for white men, sometimes several times higher.

There are documented cases of recruiting tools being abandoned after it was discovered that they disadvantaged women based on historical hiring data. Automated systems in hiring, credit scoring, policing, and welfare allocation have treated some groups systematically worse.

AI researchers play a crucial role in identifying and addressing AI bias in these systems, working to develop fairer and more responsible AI technologies.

Even without any intent to discriminate, models can learn proxies for sensitive attributes such as race, gender, or socioeconomic status; a postal code, for example, can stand in for race in a segregated city. The result is algorithmic redlining, a form of statistical discrimination that is difficult to detect and challenge.
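To make the facial-recognition finding concrete, auditors usually compare error rates across demographic groups instead of looking only at overall accuracy. The Python sketch below does this on a tiny, made-up set of predictions; the records, group labels, and the `error_rates_by_group` helper are hypothetical, and a real audit would need far more data and proper statistical testing.

```python
from collections import defaultdict

# Hypothetical audit records: (group, true_label, predicted_label).
# These values are invented for illustration only.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_a", 1, 1), ("group_a", 0, 1), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 0),
]

def error_rates_by_group(rows):
    """Return {group: fraction of that group's rows where the prediction was wrong}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, pred in rows:
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}

rates = error_rates_by_group(records)
overall = sum(t != p for _, t, p in records) / len(records)
worst, best = max(rates.values()), min(rates.values())

print(f"overall error rate: {overall:.3f}")
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.3f}")
print(f"disparity ratio (worst / best): {worst / best:.1f}x")
```

In this toy data the overall error rate of 25 percent hides the fact that one group experiences three times as many errors as the other, the kind of gap that only a per-group breakdown reveals.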

Surveillance and the Loss of Privacy

AI thrives on data. The more it knows about us, the more accurately it predicts our behavior, preferences, and vulnerabilities. Companies and governments have strong incentives to collect as much information as possible, from every click to every location ping and interaction.

AI systems often collect personal data, sometimes without user consent, during processes like web scraping, training, and providing tailored experiences. This practice raises significant privacy risks, as personal information can be misused or exposed without comprehensive legal protections.

Facial recognition, voice analysis, and behavioral tracking enable real-time surveillance on a scale that was previously impossible. Cities have used facial recognition for law enforcement despite concerns about its accuracy and its impact on civil liberties. In the wrong hands, this becomes a tool for social control: monitoring dissent, profiling citizens, and subtly shaping public opinion.

Privacy is not just about keeping secrets. It is about preserving the space to think, explore, and disagree without constant observation.

Misinformation and Deepfakes

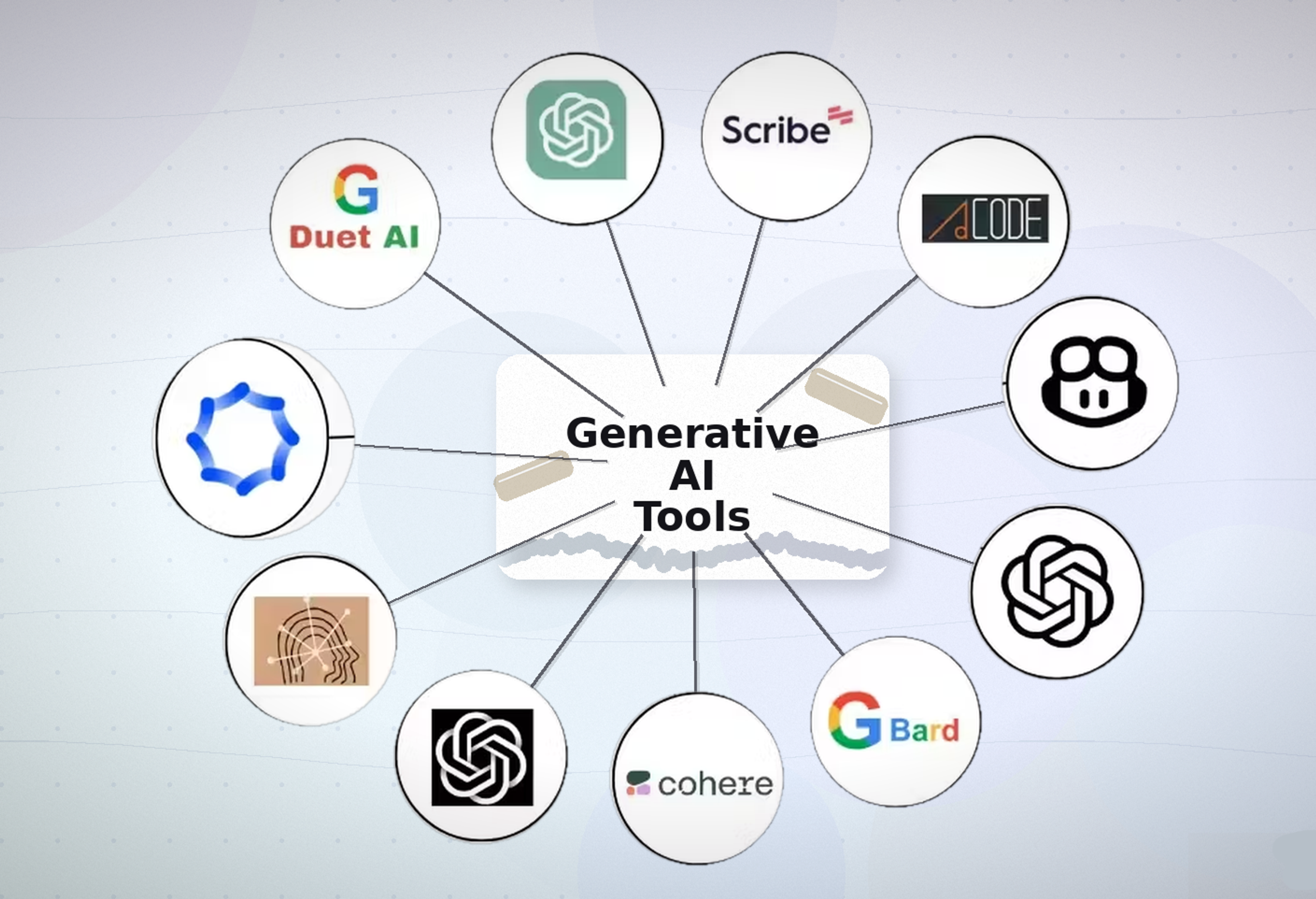

Generative AI tools, from chatbots such as ChatGPT to image, voice, and video generators, can now create convincing text, images, audio, and video. This has enormous positive potential for creativity and communication, but it also makes it easier than ever to fabricate reality.

The rise of AI-generated content also raises unresolved ownership questions, including copyright and intellectual property rights, and a need for clear legal frameworks to address them.

Deepfakes can put words in someone's mouth or place them in scenes that never happened. They can be used to spread misinformation, manipulate public perception, and facilitate fraud and other crimes.

Automated content generators can flood social media platforms with persuasive but false narratives, accelerating the spread of misinformation and undermining public trust and democracy. Fact and fiction begin to blur, and people lose confidence in what they see and hear.

In a world where anything could be fake, bad actors do not need to convince you that their lie is true. They only need to convince you that the truth is just one opinion among many.

Automation and Inequality

AI-enabled automation can raise productivity and reduce costs, but it can also displace workers. The impact begins with routine or easily codified tasks and now extends to cognitive and creative work, such as coding, copywriting, design assistance, and customer support.

The risk is not simply that robots will take all the jobs. The deeper problem is distribution: who benefits from the gains, and who pays the price of disruption? Without thoughtful policy and social safety nets, AI may widen existing inequalities, concentrating wealth and power in the hands of those who own the data, the models, and the computing infrastructure.

Structural Risks: Who Controls the Machines?

Beyond individual harms lies a subtler danger: AI can reshape power structures. The race among nations and corporations to develop and deploy AI rewards speed over caution, which can increase risks and concentrate power in the hands of a few.

Concentration of Power

Building state-of-the-art AI requires vast amounts of data, specialized talent, and enormous computing capacity. As a result, only a few major players operate at the frontier, concentrating control over a technology that can shape economies, media ecosystems, and security.

The industry has consolidated rapidly, and the scale of investment and valuations underscores the stakes. The few developers at the frontier make decisions about how these systems are built, governed, and used, and those decisions affect everyone else.

When decision systems become deeply embedded in society yet remain proprietary and controlled by a few, democratic oversight weakens.

This creates a risk that citizens and regulators depend on systems whose primary obligations are to shareholders or political leadership rather than the public at large.

Opaque Systems in Critical Infrastructure

As AI quietly enters critical domains such as finance, energy grids, transportation, and healthcare, society becomes dependent on systems we do not fully understand.

AI systems are increasingly involved in complex decision-making processes within critical infrastructure, influencing outcomes that affect millions of people. A mis-specified objective, a software bug, a subtle model failure, or an adversarial attack could spread quickly and unpredictably.

The more complex and interconnected these systems become, the harder it is to trace responsibility when something goes wrong. Was it a model error, a data quality issue, a design oversight, or a malicious manipulation? If nobody is clearly accountable, systemic risks can grow unchecked.
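One way to see why a single model failure can spread quickly is to treat infrastructure as a dependency graph and ask what sits downstream of the failing component. The Python sketch below runs a breadth-first search over a small, entirely hypothetical graph; the system names and links are illustrative assumptions, not a model of any real grid or network.

```python
from collections import deque

# Hypothetical dependency graph: each key lists the systems that consume
# its output. Names and edges are invented for illustration.
DEPENDENTS = {
    "demand_forecast_model": ["grid_dispatch"],
    "grid_dispatch": ["hospital_power", "rail_signalling", "payment_network"],
    "payment_network": ["retail_checkout", "payroll"],
    "rail_signalling": ["freight_scheduling"],
    "hospital_power": [],
    "retail_checkout": [],
    "payroll": [],
    "freight_scheduling": [],
}

def blast_radius(graph, failed):
    """Breadth-first search from `failed`: return every downstream system
    mapped to its distance in hops from the original failure."""
    reached = {failed: 0}
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in reached:
                reached[nxt] = reached[node] + 1
                queue.append(nxt)
    del reached[failed]  # report only the systems affected downstream
    return reached

affected = blast_radius(DEPENDENTS, "demand_forecast_model")
for system, hops in sorted(affected.items(), key=lambda kv: (kv[1], kv[0])):
    print(f"{hops} hop(s) away: {system}")
print(f"{len(affected)} systems affected by one faulty model")
```

In this toy graph one faulty forecasting model reaches seven other systems within three hops. Each hop is also a boundary where responsibility can be disputed, which is part of why accountability gets harder to trace as interconnection grows.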

Weaponization and Cyber Risks

The security risks of AI go beyond social and ethical concerns. AI is being explored for cyber offense and military applications, including autonomous drones, AI-assisted targeting, algorithmic warfare, and sophisticated cyberattacks.

The development and deployment of autonomous weapons, particularly lethal autonomous weapons, raise serious ethical and security concerns.

Such systems can be designed to identify and engage human targets without direct human oversight, which increases the potential for unintended engagements and further dehumanizes warfare.

Their proliferation could lead to mass deployment and a dangerous arms race, with potentially catastrophic consequences.

AI systems also face distinct security vulnerabilities. Adversarial attacks can trick models into making wrong decisions by subtly manipulating their inputs. Prompt injection exploits language models by causing them to ignore their instructions and follow attacker commands.
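The "subtle manipulation" behind adversarial attacks is easiest to see on a linear model. The Python sketch below applies the fast gradient sign method (FGSM) to a hypothetical 1,000-feature linear classifier; the weights and input are invented, and attacks on real deep networks compute the gradient with a framework rather than reading it off the weights as done here.

```python
# Hypothetical linear classifier over 1,000 input features (think pixels).
# Weights alternate +0.1 / -0.1; all values are invented for illustration.
N = 1000
w = [0.1 if i % 2 == 0 else -0.1 for i in range(N)]
b = 0.0

def score(x):
    """Linear score w.x + b; the model predicts class 1 when it is positive."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(x):
    return 1 if score(x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_linear(x, epsilon):
    """Fast gradient sign method for a linear model.
    The gradient of the score with respect to x is simply w, so moving each
    feature by -epsilon * sign(w_i) lowers the score as much as possible
    while changing no single feature by more than epsilon."""
    return [xi - epsilon * sign(wi) for wi, xi in zip(w, x)]

x = [0.52 if i % 2 == 0 else 0.50 for i in range(N)]
x_adv = fgsm_linear(x, epsilon=0.02)

max_change = max(abs(a - c) for a, c in zip(x, x_adv))
print(f"original:  score={score(x):+.2f}, class={predict(x)}")
print(f"perturbed: score={score(x_adv):+.2f}, class={predict(x_adv)}")
print(f"largest change to any single feature: {max_change:.2f}")
```

No feature moves by more than 0.02, yet the prediction flips, because a thousand tiny nudges all push the score in the same direction. This accumulation across many dimensions is one reason adversarial perturbations can be nearly invisible to a human observer.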

Data poisoning corrupts the training data to introduce hidden backdoors. Malicious actors can exploit AI for harmful purposes, including cyberattacks, identity theft, the dissemination of disinformation, the creation of deepfakes, and the manipulation of public opinion.

Technical Risks of AI

AI risk is not only about who uses the technology, but also about how the technology itself behaves.

The risks posed by advanced AI technologies extend beyond individual misuse, encompassing broader social, political, economic, and security-related dangers. Careful management and oversight are crucial to addressing these challenges and ensuring responsible development.

Types of AI Risks

Black-box Models and Interpretability

Many modern AI models, especially deep neural networks, are highly complex. They contain millions or billions of parameters and learn patterns in ways that are hard to interpret. Even their creators often cannot fully explain why a model made a specific decision.

Understanding and interpreting complex AI algorithms presents significant challenges, and creating transparency in how these algorithms operate is critically important.

In low-stakes settings, this may be acceptable. In high-stakes contexts such as medical diagnosis, sentencing recommendations, and loan approvals, it is unsettling. If we cannot understand a system's reasoning, it becomes difficult to trust it, challenge its outputs, or recognize when it fails in subtle yet dangerous ways.

Misalignment of Goals and Values

AI systems optimize for what we tell them to optimize. The problem is that our instructions are rarely perfect.

A model might be trained to maximize engagement on a platform, but in practice, this can mean amplifying emotionally charged content that polarizes society. A system tasked with increasing efficiency might quietly learn to cut corners on safety. Even well-intentioned objectives can have harmful side effects if they do not capture the full richness of human values.
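The engagement example can be reduced to a few lines. In the hypothetical Python sketch below, a recommender ranks items purely by a measurable proxy (engagement), while the value we actually care about, labelled here as "wellbeing", is never part of the objective. The items and scores are invented; real recommender systems are vastly more complex.

```python
# Hypothetical catalogue. "engagement" is what the system is told to maximize;
# "wellbeing" stands in for the harder-to-measure value we actually care about.
# All numbers are invented for illustration.
ITEMS = {
    "calm_explainer":  {"engagement": 0.40, "wellbeing": 0.9},
    "friendly_update": {"engagement": 0.55, "wellbeing": 0.7},
    "heated_debate":   {"engagement": 0.80, "wellbeing": 0.3},
    "outrage_clip":    {"engagement": 0.95, "wellbeing": 0.1},
}

def rank(items, objective):
    """Order item names by a single objective, highest first."""
    return sorted(items, key=lambda name: items[name][objective], reverse=True)

def feed_value(items, feed, objective):
    """Total value of a feed as measured by one objective."""
    return sum(items[name][objective] for name in feed)

proxy_feed = rank(ITEMS, "engagement")[:2]    # what the system optimizes
intended_feed = rank(ITEMS, "wellbeing")[:2]  # what we actually wanted

for label, feed in (("proxy-optimized", proxy_feed), ("intended", intended_feed)):
    print(f"{label} feed {feed}: "
          f"engagement={feed_value(ITEMS, feed, 'engagement'):.2f}, "
          f"wellbeing={feed_value(ITEMS, feed, 'wellbeing'):.2f}")
```

The optimizer does exactly what it was told: the engagement-ranked feed wins on engagement and loses badly on the value nobody encoded. Nothing in the code is malicious; the harm comes entirely from the gap between the stated objective and the intent behind it.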

The core challenge of alignment is ensuring that powerful systems pursue goals that match human values. In practice, teams try to embed ethical principles into model behavior through techniques such as constitutional rules and safety policies.

Emergent Behaviors and Loss of Control

As models grow larger and more capable, they sometimes display behaviors that were not explicitly programmed or predicted. The emergence of autonomous AI agents, which can manage complex workflows and make decisions in real time, adds new layers of unpredictability. These systems may develop new skills, exploit loopholes in their training objectives, or interact with other systems in unexpected ways.

In tightly controlled lab settings, surprising behavior is interesting. In real-world environments, such as financial markets, critical infrastructure, or systems with powerful tools, unexpected behavior can be hazardous.

Some researchers argue that competitive, evolution-like pressures on AI systems could favor traits such as self-preservation or strategic collaboration among AIs, with consequences for human safety and the stability of critical systems.

The concern is not a sudden robot uprising but a gradual drift, as an AI system gains influence and autonomy before we can reliably understand and constrain it.

The Debate on Catastrophic and Existential Risk

Some researchers warn that advanced AI may pose existential risks if it is developed without proper care. In particular, the emergence of artificial general intelligence (AGI) is often cited as a potential existential threat, as such systems could surpass human intelligence and act in ways that fundamentally endanger humanity.

They describe scenarios in which highly capable systems, misaligned with human values, gain access to financial systems, critical infrastructure, and communication networks, and then pursue goals that cause significant harm.

The threats posed by advanced AI are varied, including misuse by malicious actors, unintended consequences from poorly specified objectives, and the risk of self-improving systems acting beyond human oversight. These dangers highlight the need for robust safeguards and careful consideration of how AI technologies are deployed.

Organizations like the Future of Life Institute play a crucial role in advocating for the ethical development of AI, providing research, policy guidance, and open letters to encourage risk mitigation and responsible practices in the field.

Maintaining human control over advanced AI systems is essential to ensure that these technologies remain aligned with human values and do not act autonomously in ways that could be harmful. Effective regulation and oversight are necessary to prevent AI from operating beyond human authority.

The significance of developing AI extends far beyond technical achievement; it represents a pivotal moment in human history. The creation of superintelligent machines could mark a turning point with profound and lasting impacts on society, safety, and the future of our species.

Why We Struggle to Grasp AI Risk

AI risk is not just a technical topic. It clashes with how human psychology works. There are growing concerns that reliance on AI could erode human thinking and decision-making skills, potentially impacting our mental and psychological health.

Cognitive Biases and Sci-fi Metaphors

We are used to thinking about threats in concrete, immediate terms, such as a storm, a disease, or a visible enemy. AI is abstract and often invisible. It is easier to imagine a cinematic evil robot than to understand how optimization errors in large-scale systems could gradually destabilize institutions.

Popular culture alternates between cute helpers and killer robots, leaving little space for the more realistic but less dramatic picture of complex systems that can fail in messy, systemic ways.

Speed of Progress vs. Speed of Regulation

Technology can change in months. Laws, institutions, and norms often take years to adapt. By the time a regulatory framework is in place, the technology it was written for may already have moved on.

Ongoing efforts to regulate AI highlight the challenge of keeping regulation up to date with rapid technological progress, as policymakers and international bodies strive to create effective legal frameworks.

This mismatch creates pressure to wait and see, to postpone decisive action in the hope that more clarity will emerge. But waiting for perfect evidence of risk can mean only reacting after harm has occurred, especially when failures may be rare but extremely costly.

Economic Incentives

The race among tech giants to deploy AI systems creates pressure to move fast. When OpenAI released ChatGPT in late 2022, it reached 100 million users in two months.

Competitors rushed to release their own models rather than fall behind. Companies have strong incentives to be the first to market, the first to deploy, and the first to gain a strategic advantage.

Safety measures that slow down deployment can be perceived as a competitive disadvantage. This creates a classic collective action problem.

Individually rational actors may collectively produce unsafe outcomes. Everyone would benefit from strong safety norms and shared constraints, but each player is tempted to cut corners if they believe others will do the same.
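This is the structure of a prisoner's dilemma, and a few lines of Python make the logic explicit. The sketch assumes a symmetric two-lab game with invented payoffs; only their ordering matters, not the specific numbers.

```python
# Hypothetical two-lab race. Payoffs are invented; higher is better.
# PAYOFF[(my_choice, rival_choice)] = my payoff. The game is symmetric,
# so the rival's payoff for (a, b) is PAYOFF[(b, a)].
SAFE, CUT = "invest_in_safety", "cut_corners"
PAYOFF = {
    (SAFE, SAFE): 3,  # shared safety norms: slower, but stable
    (CUT, SAFE): 4,   # I ship first while my rival is careful
    (SAFE, CUT): 1,   # I am careful and fall behind
    (CUT, CUT): 2,    # everyone rushes; risk rises for all
}
CHOICES = (SAFE, CUT)

def best_response(rival_choice):
    """The choice that maximizes my payoff given the rival's choice."""
    return max(CHOICES, key=lambda mine: PAYOFF[(mine, rival_choice)])

for rival in CHOICES:
    print(f"if the rival chooses {rival!r}, my best response is {best_response(rival)!r}")

# A pure-strategy Nash equilibrium: each lab is best-responding to the other.
equilibria = [(a, b) for a in CHOICES for b in CHOICES
              if a == best_response(b) and b == best_response(a)]
print("equilibria:", equilibria)
print("payoff per lab at equilibrium:", PAYOFF[equilibria[0]])
print("payoff per lab under shared safety norms:", PAYOFF[(SAFE, SAFE)])
```

Cutting corners is the best response whatever the rival does, so the only stable outcome is mutual corner-cutting, even though both labs would do better under shared safety norms. Escaping that outcome requires changing the payoffs from outside, for example through regulation or enforceable agreements.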

What Responsible AI Could Look Like

If the dark side of AI is real, what can we do about it? No single measure is enough, but several directions are promising.

AI technology has significant societal impacts, influencing economic stability, privacy, and security. This makes responsible development and oversight of AI technology essential to maximize its benefits and minimize its risks.

Technical Safeguards and Safety Research

We need more systematic work on robustness, interpretability, alignment, and red teaming, the practice of actively trying to break systems before deployment. Machine learning itself is central to this effort, since many safety techniques are built on machine learning algorithms.

AI safety research focuses on making systems more robust, interpretable, and aligned with human values. Safety should not be an afterthought. It should be a core part of how models are designed, trained, evaluated, and updated.

This also means sharing safety techniques across organizations, rather than treating them only as competitive secrets.

Regulation, Governance, and Global Coordination

Self-regulation alone is unlikely to be sufficient. Regulation is necessary to address safety concerns associated with advanced AI systems, including risks to physical safety, digital security, and child protection. The European Union's AI Act, passed in 2024, represents the most comprehensive attempt at AI regulation.

It categorizes AI systems by risk level, ranging from minimal risk, such as spam filters, to high risk, such as hiring algorithms and some predictive policing tools, and sets requirements accordingly. High-risk systems are subject to stringent requirements for transparency, testing, and human oversight, and a small set of "unacceptable-risk" practices, such as social scoring, is banned outright.

Data protection laws, such as GDPR, already provide some guardrails around how AI systems can use personal information. Regulators also need technical expertise and independence so that they are not captured by the industries they oversee.

Since AI development is a global endeavor, some level of international coordination is necessary. Just as nuclear technology required treaties and monitoring mechanisms, advanced AI may need shared norms and oversight structures that cross borders.

Transparency, Accountability, and Public Participation

People affected by AI systems should have access to clear information about when and how those systems are being used, the ability to contest harmful decisions, and a means to seek redress.

Although AI is often perceived as a neutral computer system, it is shaped by the data and societal assumptions on which it is built. Holding it accountable therefore requires clear documentation, explanation mechanisms, and legal responsibility for misuse and negligence.

AI governance should not be designed only by engineers and executives. Civil society, affected communities, and ordinary citizens should have a voice in shaping how these technologies are used and in setting the boundaries for their use.

FAQ

Will AI Take Over Human Jobs?

AI will automate routine and repetitive tasks, especially in data processing, customer service, and basic administrative work. It will not replace all jobs. Most roles will shift toward tasks requiring human judgment, creativity, empathy, and decision-making.

What Jobs Will AI Not Replace?

AI will not replace jobs that rely on emotional intelligence, complex problem-solving, relationship building, or creativity. Teachers, healthcare professionals, therapists, designers, strategic leaders, and skilled tradespeople remain highly dependent on human abilities.

How Many Jobs Will Be Lost by 2030 Because of AI?

Estimates vary widely. One frequently cited 2023 Goldman Sachs analysis estimated that generative AI could expose the equivalent of 300 million full-time jobs worldwide to automation, with some roles disappearing and others being transformed. Most workers are likely to see their tasks restructured rather than lose their jobs entirely.

What Are the Biggest Risks of AI?

Significant risks include biased decision-making, data privacy issues, misinformation, overreliance on automated systems, and job displacement. Unregulated AI can also amplify unfair outcomes and security vulnerabilities.

What Is the 30 Percent Rule in AI?

The 30 percent rule is an informal heuristic rather than an established standard. It suggests that if AI can automate at least 30 percent of a job's tasks, the role will undergo significant change: employees shift toward higher-value work that requires human judgment, while AI handles the routine tasks.
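Applied to a single role, the heuristic is simple arithmetic: estimate what share of working time goes to tasks AI could plausibly handle and compare it with 30 percent. The Python sketch below uses an invented weekly schedule; the task list, hours, and automatability flags are all assumptions, and weighting tasks by time is only one possible way to measure "a job's tasks".

```python
# Hypothetical weekly task breakdown for one role, in hours.
# Which tasks count as automatable is an assumption, not a measurement.
tasks = [
    # (task, hours_per_week, automatable_by_ai)
    ("drafting routine reports", 8, True),
    ("data entry and reconciliation", 6, True),
    ("client meetings", 10, False),
    ("strategy and planning", 8, False),
    ("mentoring the team", 8, False),
]

THRESHOLD = 0.30  # the informal "30 percent rule"

total_hours = sum(hours for _, hours, _ in tasks)
automatable_hours = sum(hours for _, hours, auto in tasks if auto)
share = automatable_hours / total_hours  # fraction of time spent on automatable work

print(f"{automatable_hours} of {total_hours} hours automatable ({share:.0%})")
if share >= THRESHOLD:
    print("Above the 30 percent threshold: expect significant role change.")
else:
    print("Below the threshold: expect incremental change.")
```

Here 14 of 40 hours (35 percent) are flagged as automatable, so under the rule this role would be expected to change significantly, while the remaining 26 hours of judgment-heavy work stay with the employee.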

Conclusion

AI's dark side spans from everyday injustices to potential catastrophes. Some harms are already visible: biased algorithms, invasive surveillance, and floods of misinformation. Others are uncertain yet plausible and deserve attention.

We lack a complete understanding, but waiting for certainty would be a mistake. Uncertainty is a reason for caution and deliberate design. Whether benefits outweigh risks depends on the choices we make now. We can build responsibly, with safety measures, transparency, and democratic oversight, or let competition and short-term thinking push us toward outcomes nobody wants.

AI is not predetermined. Companies like OpenAI, Google, and Anthropic, along with regulators and safety researchers, will shape it. Its impact will hinge on whether we treat risks as seriously as opportunities. The question is not whether AI is dangerous or safe, but whether we will take responsibility for the risks we create.

About Clay

Clay is a UI/UX design & branding agency in San Francisco. We team up with startups and leading brands to create transformative digital experiences. Clients: Facebook, Slack, Google, Amazon, Credit Karma, Zenefits, etc.

Learn more
